> xidianwangtao@gmail.com

About TensorFlow Serving

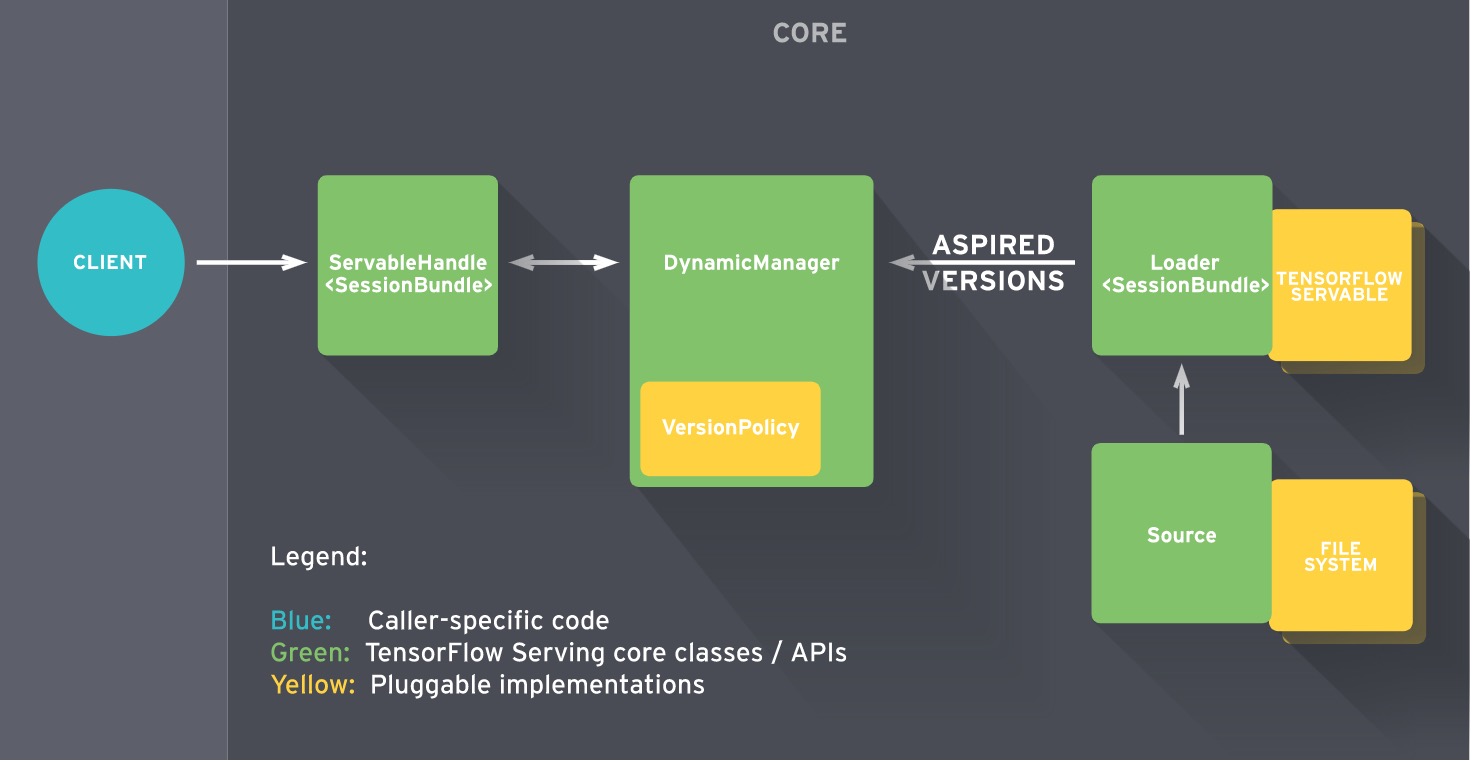

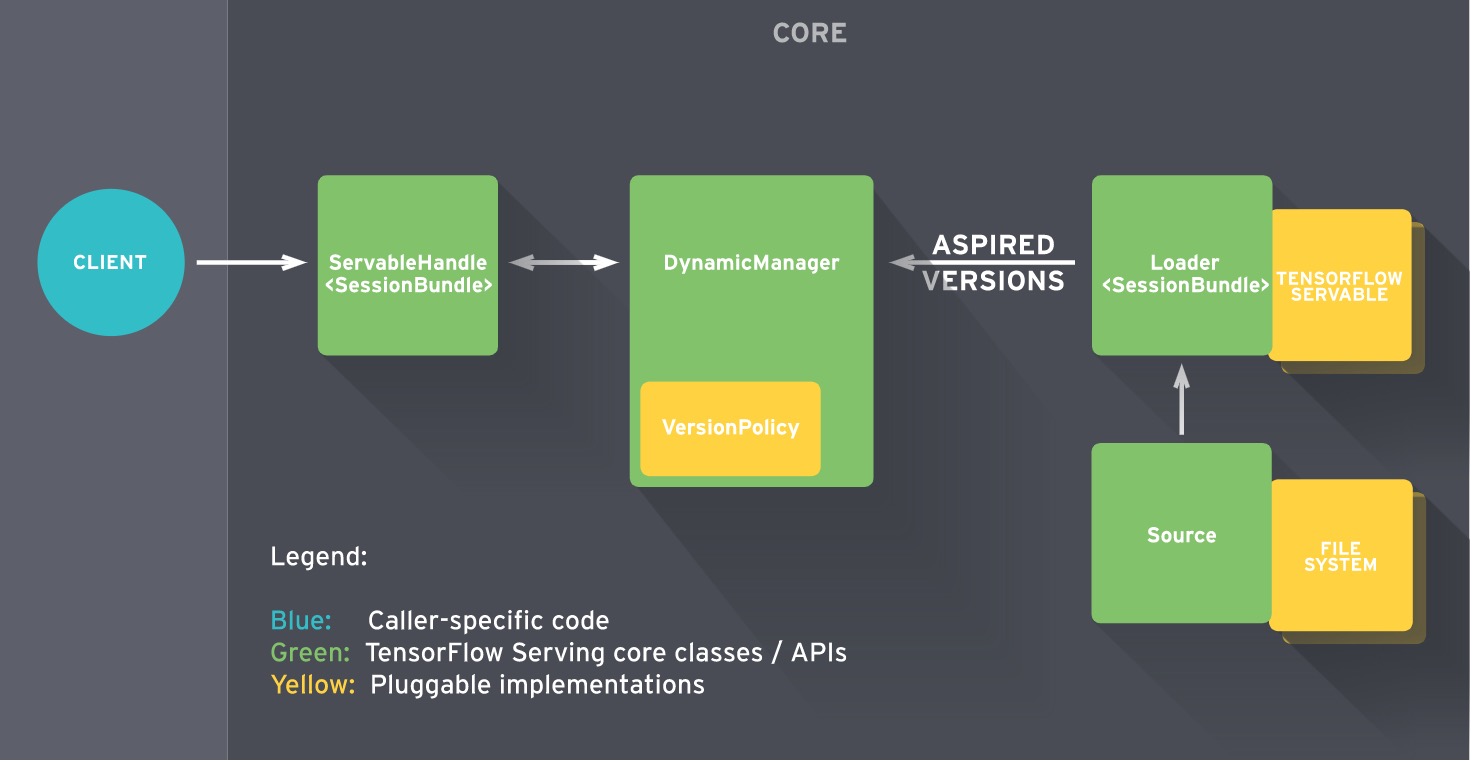

The architecture of TensorFlow Serving is shown in the figure.

For more of TensorFlow Serving's basic concepts, please read the official documentation. However good a translation is, it can't beat the original.

Here I have summarized the points that I consider most important:

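- TensorFlow Serving can serve multiple versions of multiple models at the same time, configured through a model version policy;

- By default, only the latest version of a model is loaded;

- Supports file-system-based automatic discovery and loading of models;

- Low request-processing latency;

- Stateless, so it scales horizontally;

- Different model versions can be A/B tested;

- Supports scanning and loading TensorFlow models from the local file system;

- Supports scanning and loading TensorFlow models from HDFS;

- Provides a gRPC interface for clients to call;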

TensorFlow Serving Configuration

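I combed through the entire official TensorFlow Serving documentation and still could not find a complete example of a model config, which was frustrating. The project moves so fast that it's only natural for the docs to lag behind, so the only option left was to read the code.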

In the main function under model_servers, we find the complete list of tensorflow_model_server options and their descriptions:

tensorflow_serving/model_servers/main.cc#L314

```cpp
int main(int argc, char** argv) {
  ...
  std::vector<tensorflow::Flag> flag_list = {
      tensorflow::Flag("port", &port, "port to listen on"),
      tensorflow::Flag("enable_batching", &enable_batching, "enable batching"),
      tensorflow::Flag("batching_parameters_file", &batching_parameters_file,
                       "If non-empty, read an ascii BatchingParameters "
                       "protobuf from the supplied file name and use the "
                       "contained values instead of the defaults."),
      tensorflow::Flag("model_config_file", &model_config_file,
                       "If non-empty, read an ascii ModelServerConfig "
                       "protobuf from the supplied file name, and serve the "
                       "models in that file. This config file can be used to "
                       "specify multiple models to serve and other advanced "
                       "parameters including non-default version policy. (If "
                       "used, --model_name, --model_base_path are ignored.)"),
      tensorflow::Flag("model_name", &model_name,
                       "name of model (ignored "
                       "if --model_config_file flag is set"),
      tensorflow::Flag("model_base_path", &model_base_path,
                       "path to export (ignored if --model_config_file flag "
                       "is set, otherwise required)"),
      tensorflow::Flag("file_system_poll_wait_seconds",
                       &file_system_poll_wait_seconds,
                       "interval in seconds between each poll of the file "
                       "system for new model version"),
      tensorflow::Flag("tensorflow_session_parallelism",
                       &tensorflow_session_parallelism,
                       "Number of threads to use for running a "
                       "Tensorflow session. Auto-configured by default."
                       "Note that this option is ignored if "
                       "--platform_config_file is non-empty."),
      tensorflow::Flag("platform_config_file", &platform_config_file,
                       "If non-empty, read an ascii PlatformConfigMap protobuf "
                       "from the supplied file name, and use that platform "
                       "config instead of the Tensorflow platform. (If used, "
                       "--enable_batching is ignored.)")};
  ...
}
```

So all model version configuration goes in the file passed via --model_config_file. Here is the complete structure of a model config:

tensorflow_serving/config/model_server_config.proto#L55

```protobuf
// Common configuration for loading a model being served.
message ModelConfig {
  // Name of the model.
  string name = 1;

  // Base path to the model, excluding the version directory.
  // E.g> for a model at /foo/bar/my_model/123, where 123 is the version, the
  // base path is /foo/bar/my_model.
  //
  // (This can be changed once a model is in serving, *if* the underlying data
  // remains the same. Otherwise there are no guarantees about whether the old
  // or new data will be used for model versions currently loaded.)
  string base_path = 2;

  // Type of model.
  // TODO(b/31336131): DEPRECATED. Please use 'model_platform' instead.
  ModelType model_type = 3 [deprecated = true];

  // Type of model (e.g. "tensorflow").
  //
  // (This cannot be changed once a model is in serving.)
  string model_platform = 4;

  reserved 5;

  // Version policy for the model indicating how many versions of the model to
  // be served at the same time.
  // The default option is to serve only the latest version of the model.
  //
  // (This can be changed once a model is in serving.)
  FileSystemStoragePathSourceConfig.ServableVersionPolicy model_version_policy = 7;

  // Configures logging requests and responses, to the model.
  //
  // (This can be changed once a model is in serving.)
  LoggingConfig logging_config = 6;
}
```

There is model_version_policy, which is exactly the setting we were looking for. It is defined as follows:

tensorflow_serving/sources/storage_path/file_system_storage_path_source.proto

```protobuf
message ServableVersionPolicy {
  // Serve the latest versions (i.e. the ones with the highest version
  // numbers), among those found on disk.
  //
  // This is the default policy, with the default number of versions as 1.
  message Latest {
    // Number of latest versions to serve. (The default is 1.)
    uint32 num_versions = 1;
  }

  // Serve all versions found on disk.
  message All {
  }

  // Serve a specific version (or set of versions).
  //
  // This policy is useful for rolling back to a specific version, or for
  // canarying a specific version while still serving a separate stable
  // version.
  message Specific {
    // The version numbers to serve.
    repeated int64 versions = 1;
  }
}
```

So model_version_policy currently supports three options:

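- `all: {}` loads every version of the model found on disk;

- `latest: { num_versions: n }` loads only the n most recent versions (those with the highest version numbers). This is the default, with n = 1;

- `specific: { versions: m }` loads only the specified version(s) and is typically used for testing. The proto comments also mention rolling back to, or canarying, a specific version. Because versions is a repeated field, several versions can be listed by repeating it, e.g. `specific: { versions: 1 versions: 3 }`;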
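To make the three policies concrete, here is a minimal Python sketch of how a version policy narrows the set of versions found under base_path. It is not TensorFlow Serving's implementation. It assumes, as the ModelConfig comment suggests, that each version is a subdirectory named by its integer version number (e.g. /foo/bar/my_model/123), and that non-numeric names are skipped. The function names and the dict-based policy shape, which mirrors the text config, are hypothetical.

```python
import os


def discover_versions(base_path):
    """Return the sorted integer versions found as subdirectories of base_path.

    Assumption: subdirectories whose names are not integers are ignored.
    """
    versions = []
    for entry in os.listdir(base_path):
        if entry.isdigit() and os.path.isdir(os.path.join(base_path, entry)):
            versions.append(int(entry))
    return sorted(versions)


def select_versions(available, policy=None):
    """Apply a version policy dict such as {"latest": {"num_versions": 2}}."""
    available = sorted(set(available))
    if policy is None:
        # No policy configured: behave like latest with num_versions = 1.
        policy = {"latest": {"num_versions": 1}}
    if "all" in policy:
        return available
    if "latest" in policy:
        n = policy["latest"].get("num_versions", 1)
        return available[-n:] if n > 0 else []
    if "specific" in policy:
        # In this sketch, requested versions that are not on disk are skipped.
        wanted = set(policy["specific"]["versions"])
        return [v for v in available if v in wanted]
    raise ValueError("unknown version policy: %r" % (policy,))
```

For example, `select_versions(discover_versions("/tmp/monitored/inception_model"), {"latest": {"num_versions": 2}})` would pick the two highest version directories under that path.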

So when you start the server with `tensorflow_model_server --port=9000 --model_config_file=<file>`, a complete model_config_file can look like this:

```
model_config_list: {
  config: {
    name: "mnist",
    base_path: "/tmp/monitored/mnist_model",
    model_platform: "tensorflow",
    model_version_policy: {
      all: {}
    }
  },
  config: {
    name: "inception",
    base_path: "/tmp/monitored/inception_model",
    model_platform: "tensorflow",
    model_version_policy: {
      latest: {
        num_versions: 2
      }
    }
  },
  config: {
    name: "mxnet",
    base_path: "/tmp/monitored/mxnet_model",
    model_platform: "tensorflow",
    model_version_policy: {
      specific: {
        versions: 1
      }
    }
  }
}
```
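Writing this file by hand gets tedious once many models are involved. Below is a small Python helper that emits a model_config_file in the same text format as the example above, starting from a list of plain dicts. The helper and its dict keys are hypothetical and not part of TensorFlow Serving. The field names are those of ModelConfig, and the policy dict mirrors model_version_policy.

```python
def render_policy(policy):
    """Render a version policy dict such as {"latest": {"num_versions": 2}}."""
    if len(policy) != 1:
        raise ValueError("expected exactly one of all / latest / specific")
    kind, params = next(iter(policy.items()))
    if kind == "all":
        return "all: {}"
    if kind == "latest":
        return "latest: { num_versions: %d }" % params["num_versions"]
    if kind == "specific":
        # versions is a repeated field: emit one "versions:" entry per value.
        entries = " ".join("versions: %d" % v for v in params["versions"])
        return "specific: { %s }" % entries
    raise ValueError("unknown version policy: %s" % kind)


def render_model_config_list(models):
    """Render ModelServerConfig text for a list of model dicts.

    Each dict needs "name", "base_path" and "policy"; "model_platform"
    defaults to "tensorflow". Values are not escaped, so they must not
    contain double quotes.
    """
    lines = ["model_config_list: {"]
    for i, m in enumerate(models):
        lines.append("  config: {")
        lines.append('    name: "%s",' % m["name"])
        lines.append('    base_path: "%s",' % m["base_path"])
        lines.append('    model_platform: "%s",' % m.get("model_platform", "tensorflow"))
        lines.append("    model_version_policy: { %s }" % render_policy(m["policy"]))
        # Separate config entries with a comma, as in the example above.
        lines.append("  }," if i < len(models) - 1 else "  }")
    lines.append("}")
    return "\n".join(lines)
```

You could write the result to a file such as /tmp/models.conf (a hypothetical path) and pass it with --model_config_file=/tmp/models.conf.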

Building TensorFlow Serving

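Building and installing TensorFlow Serving is already explained fairly clearly in the setup doc on GitHub. I only want to stress one point here, a very important one, which that doc mentions: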

> Optimized build
>
> It's possible to compile using some platform specific instruction sets (e.g. AVX) that can significantly improve performance. Wherever you see 'bazel build' in the documentation, you can add the flags `-c opt --copt=-msse4.1 --copt=-msse4.2 --copt=-mavx --copt=-mavx2 --copt=-mfma --copt=-O3` (or some subset of these flags). For example:
>
> ```shell
> bazel build -c opt --copt=-msse4.1 --copt=-msse4.2 --copt=-mavx --copt=-mavx2 --copt=-mfma --copt=-O3 tensorflow_serving/...
> ```
>
> Note: These instruction sets are not available on all machines, especially with older processors, so it may not work with all flags. You can try some subset of them, or revert to just the basic '-c opt' which is guaranteed to work on all machines.

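This matters a lot. At first we compiled without these copt options. Testing showed that the resulting tensorflow_model_server performed poorly (at least, it did not meet our requirements): latency under concurrent client requests was high, with essentially every request taking more than 100 ms. With the copt options added, the same concurrent test against the same model kept 99.987% of requests within 50 ms. The difference is stark.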
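Comparisons like this usually boil down to two numbers taken from a load test: the share of requests within a latency budget, and a high percentile. Below is a minimal Python sketch for computing both from per-request latencies in milliseconds. How the latencies are collected is out of scope, and the function names are hypothetical. It uses the nearest-rank definition of a percentile, which is one of several common definitions.

```python
import math


def fraction_within(latencies_ms, budget_ms):
    """Share of requests whose latency is <= budget_ms (0.0 if no data)."""
    if not latencies_ms:
        return 0.0
    return sum(1 for x in latencies_ms if x <= budget_ms) / len(latencies_ms)


def percentile(latencies_ms, p):
    """Nearest-rank percentile for 0 < p <= 100."""
    if not latencies_ms or not 0 < p <= 100:
        raise ValueError("need data and 0 < p <= 100")
    ordered = sorted(latencies_ms)
    # Multiply before dividing to avoid float error in p / 100.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]
```

`fraction_within(latencies, 50)` gives the kind of figure quoted above as 99.987%.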

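For whether to use --copt=-O2 or --copt=-O3 and what they mean, see GCC's documentation on optimization options. I won't discuss it here (because I don't really understand it either...).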

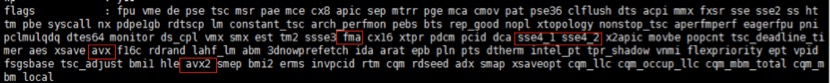

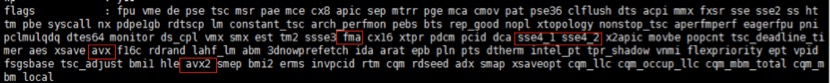

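So should you always compile with exactly the copt options given in the official docs? No! It depends on the CPU of the server that runs TensorFlow Serving. The flags line in /proc/cpuinfo lists the instruction sets your CPU supports (look for sse4_1, sse4_2, avx, avx2 and fma), and that tells you which copt options to use.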
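Here is a minimal Python sketch of that check. It assumes an x86 Linux host whose /proc/cpuinfo contains a "flags" line. The mapping from cpuinfo flag names to the -m options in the official example is an assumption, and bazel_copts is a hypothetical helper, not part of Bazel or TensorFlow Serving.

```python
import os

# Assumed mapping from /proc/cpuinfo flag names to the copt options that
# appear in the official "Optimized build" example.
CPUINFO_TO_COPT = [
    ("sse4_1", "--copt=-msse4.1"),
    ("sse4_2", "--copt=-msse4.2"),
    ("avx", "--copt=-mavx"),
    ("avx2", "--copt=-mavx2"),
    ("fma", "--copt=-mfma"),
]


def cpu_flags(cpuinfo_text):
    """Return the flags listed on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no x86-style flags line found


def bazel_copts(cpuinfo_text, opt_level="-O3"):
    """Build bazel options, keeping only instruction sets the CPU reports."""
    flags = cpu_flags(cpuinfo_text)
    options = ["-c", "opt"]
    options += [copt for name, copt in CPUINFO_TO_COPT if name in flags]
    options.append("--copt=" + opt_level)
    return options


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print("bazel build", " ".join(bazel_copts(f.read())), "tensorflow_serving/...")
```

The function takes cpuinfo text rather than reading the file itself. If you build on one machine and serve on another, you can feed it the serving host's /proc/cpuinfo, which is the CPU that matters.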

Usage Notes

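- Because TensorFlow Serving can serve multiple versions of multiple models at once, clients should specify the model name and version explicitly in gRPC calls whenever possible. Different versions are different models and may return very different predictions.

- When copying a trained model into the model base path, it is best to pack it into a tar archive first, copy the archive into the base path, and extract it there. Models are large and copying takes time. The exported model files may therefore already be in place while the corresponding meta file is not. If TensorFlow Serving starts loading the model at that moment and cannot find the meta file, the load fails, and the server stops trying to load that version again.

- If you are using protobuf version <= 3.2.0, note that TensorFlow Serving can only load models no larger than 64 MB. You can check your protobuf version with `pip list | grep proto`. My environment uses 3.5.0.post1, which does not have this problem, but keep an eye on it. See issue 582 for details.

- The project claims that model_config_list can be changed dynamically through the gRPC interface. In practice, though, you need to develop a custom resource to do so, so it does not work out of the box. Follow issue 380 for updates.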
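The tar-then-extract approach shrinks the window in which a half-copied version is visible, but extraction itself still writes files one at a time. A common variation, sketched below as an assumption rather than something from the TensorFlow Serving docs, is to extract into a staging directory whose name is not a number. That directory is then renamed to the numeric version directory in a single step. The sketch relies on three assumptions:

- TensorFlow Serving only picks up subdirectories whose names are integer version numbers.
- The staging directory sits on the same local POSIX file system as base_path, so `os.rename` is atomic. This does not carry over to HDFS.
- The archive stores the model files (e.g. saved_model.pb, variables/) at its root and comes from a trusted source.

`publish_version` and the `.staging-` prefix are hypothetical names.

```python
import os
import shutil
import tarfile
import tempfile


def publish_version(tar_path, base_path, version):
    """Extract a model archive and expose it as base_path/<version> in one step.

    Assumptions: the server ignores non-numeric directory names, staging and
    target are on the same local POSIX file system, and the archive is trusted
    with the model files at its root.
    """
    target = os.path.join(base_path, str(int(version)))
    if os.path.exists(target):
        raise FileExistsError(target)
    # A non-numeric name, so it should not be mistaken for a model version.
    staging = tempfile.mkdtemp(prefix=".staging-", dir=base_path)
    try:
        with tarfile.open(tar_path) as tar:
            tar.extractall(staging)
        os.chmod(staging, 0o755)  # mkdtemp creates 0700; let the server read it
        os.rename(staging, target)  # the version appears all at once
    except BaseException:
        shutil.rmtree(staging, ignore_errors=True)
        raise
    return target
```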

TensorFlow Serving on Kubernetes

We deploy TensorFlow Serving to Kubernetes as a Deployment. Here is the corresponding Deployment yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tensorflow-serving
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: "tensorflow-serving"
    spec:
      restartPolicy: Always
      imagePullSecrets:
      - name: harborsecret
      containers:
      - name: tensorflow-serving
        image: registry.vivo.xyz:4443/bigdata_release/tensorflow_serving1.3.0:v0.5
        command: ["/bin/sh", "-c","export CLASSPATH=.:/usr/lib/jvm/java-1.8.0/lib/tools.jar:$(/usr/lib/hadoop-2.6.1/bin/hadoop classpath --glob); /root/tensorflow_model_server --port=8900 --model_name=test_model --model_base_path=hdfs://xx.xx.xx.xx:zz/data/serving_model"]
        ports:
        - containerPort: 8900
```

Summary

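TensorFlow Serving is really great. It makes serving your models easy, and it offers file-system-based automatic model discovery, several model loading policies, A/B testing support and more. Deploying it on Kubernetes is just as easy, which makes it even more of a pleasure. Our TaaS platform now offers self-service requests for TensorFlow Serving, so users can easily create a custom-configured TensorFlow Serving instance for their clients to call. Next, we plan to improve load balancing, autoscaling and automatic instance creation for TensorFlow Serving. Anyone interested is welcome to get in touch.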