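Business requirements (background):

- Implement prefix search for the search engine (Chinese prefix queries, full-pinyin prefix queries and pinyin-initials prefix queries)

- Implement full-text search over abstracts with title boosting (titles weighted higher than body content), sort by relevance, and list the 20 most relevant articles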

I. Prefix search for the search engine:

Chinese search:

1. Searching "刘" matches "刘德华", "刘斌" and "刘德志"

2. Searching "刘德" matches "刘德华" and "刘德志"

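3. Searching "德华" matches "刘德华" (strictly speaking, this is a substring match rather than a prefix match; the edge_ngram approach chosen below only indexes prefixes, so it does not cover this case)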

Summary: a search returns the subset of names in the collection that match the search text.
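This is a minimal plain-Java sketch of the three Chinese examples. No Elasticsearch is involved, and the class and method names are hypothetical. It runs each query through a prefix test and a substring test. The first two examples are prefix matches, while 德华 → 刘德华 only succeeds as a substring match.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical toy model of the Chinese examples using plain string tests. */
class ChinesePrefixDemo {
    static final List<String> NAMES = List.of("刘德华", "刘斌", "刘德志");

    /** Prefix match: the kind of lookup an edge_ngram index can answer. */
    static List<String> prefixSearch(String query) {
        return NAMES.stream().filter(name -> name.startsWith(query)).collect(Collectors.toList());
    }

    /** Substring match: what the 德华 example actually needs. */
    static List<String> substringSearch(String query) {
        return NAMES.stream().filter(name -> name.contains(query)).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(prefixSearch("刘"));      // [刘德华, 刘斌, 刘德志]
        System.out.println(prefixSearch("刘德"));    // [刘德华, 刘德志]
        System.out.println(prefixSearch("德华"));    // []
        System.out.println(substringSearch("德华")); // [刘德华]
    }
}
```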

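Full-pinyin search:

1. Searching "li" matches "刘德华", "刘斌" and "刘德志"

2. Searching "liud" matches "刘德华" and "刘德志"

3. Searching "liudeh" matches "刘德华"

Summary: the names are converted to pinyin, and a search returns the subset of names whose pinyin matches the search text.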
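Here is the same idea for full pinyin, as a toy sketch. The `PINYIN` map is a hypothetical hand-written dictionary that covers only the example characters. In Elasticsearch, the pinyin analysis plugin does this conversion.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical toy model of full-pinyin prefix search. */
class FullPinyinPrefixDemo {
    // Toy dictionary covering only the example characters (an assumption for illustration).
    static final Map<Character, String> PINYIN = Map.of(
            '刘', "liu", '德', "de", '华', "hua", '斌', "bin", '志', "zhi");
    static final List<String> NAMES = List.of("刘德华", "刘斌", "刘德志");

    /** Joins the pinyin of every character, e.g. 刘德华 -> liudehua; unknown characters are kept. */
    static String fullPinyin(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toCharArray()) {
            sb.append(PINYIN.getOrDefault(c, String.valueOf(c)));
        }
        return sb.toString();
    }

    /** Returns the names whose joined pinyin starts with the lower-cased query. */
    static List<String> search(String query) {
        String q = query.toLowerCase(Locale.ROOT);
        return NAMES.stream()
                .filter(name -> fullPinyin(name).startsWith(q))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(search("li"));     // [刘德华, 刘斌, 刘德志]
        System.out.println(search("liud"));   // [刘德华, 刘德志]
        System.out.println(search("liudeh")); // [刘德华]
    }
}
```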

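Pinyin-initials search:

1. Searching "w" matches "我是中国人" and "我爱我的祖国"

2. Searching "wszg" matches "我是中国人"

Summary: the first letter of each character's pinyin is joined into an initials string, and a search returns the subset whose initials string starts with the search text (prefix match).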
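A toy sketch of the initials variant follows. As before, `PINYIN` is a hypothetical dictionary for the example characters only. Note that "wszg" is a prefix of 我是中国人's full initials string "wszgr".

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical toy model of pinyin-initials prefix search. */
class InitialsPrefixDemo {
    // Toy dictionary for the example characters only (an assumption for illustration;
    // in Elasticsearch the pinyin analysis plugin does this conversion).
    static final Map<Character, String> PINYIN = Map.of(
            '我', "wo", '是', "shi", '中', "zhong", '国', "guo",
            '人', "ren", '爱', "ai", '的', "de", '祖', "zu");
    static final List<String> SENTENCES = List.of("我是中国人", "我爱我的祖国");

    /** First letter of each character's pinyin, e.g. 我是中国人 -> wszgr; unknown characters are kept. */
    static String initials(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toCharArray()) {
            String py = PINYIN.get(c);
            sb.append(py != null ? py.charAt(0) : c);
        }
        return sb.toString();
    }

    /** Returns the sentences whose initials string starts with the query. */
    static List<String> search(String query) {
        String q = query.toLowerCase(Locale.ROOT);
        return SENTENCES.stream()
                .filter(s -> initials(s).startsWith(q))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(initials("我是中国人"));   // wszgr
        System.out.println(initials("我爱我的祖国")); // wawdzg
        System.out.println(search("w"));             // [我是中国人, 我爱我的祖国]
        System.out.println(search("wszg"));          // [我是中国人]
    }
}
```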

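Solutions:

Option 1: store the Chinese text, the pinyin initials and the full pinyin of the field to be searched in three separate, non-analyzed index fields. This is the simplest, most direct approach, because the inverted index stores the values themselves. At search time, run a wildcard ("like") query against each of the three fields and return the matching records. (Pros: simple storage format, and the inverted index holds the least data. Cons: wildcard lookups over the index are expensive, and a prefix query costs far more than a term query.)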
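This is a simplified model of why option 1 is expensive. It assumes a sorted term dictionary, here a `TreeMap`, which is not Lucene's actual data structure. When whole values are stored as single terms, a term query for liud finds nothing. A prefix query therefore has to walk every term in the prefix range and union their document lists, so the work grows with the number of matching terms.

```java
import java.util.Collections;
import java.util.Set;
import java.util.SortedMap;
import java.util.TreeMap;
import java.util.TreeSet;

/**
 * Simplified model of a sorted term dictionary (not Lucene's real data structures):
 * a term query is one lookup, a prefix query walks every term in the prefix range.
 */
class PrefixVsTermDemo {
    private final TreeMap<String, Set<Integer>> terms = new TreeMap<>();

    void add(int docId, String term) {
        terms.computeIfAbsent(term, t -> new TreeSet<>()).add(docId);
    }

    /** Terms starting with the prefix; Character.MAX_VALUE serves as the exclusive upper bound. */
    private SortedMap<String, Set<Integer>> termsWithPrefix(String prefix) {
        return terms.subMap(prefix, prefix + Character.MAX_VALUE);
    }

    /** Term query: a single dictionary lookup. */
    Set<Integer> termQuery(String term) {
        return terms.getOrDefault(term, Collections.emptySet());
    }

    /** Prefix query: union the postings of every matching term. */
    Set<Integer> prefixQuery(String prefix) {
        Set<Integer> hits = new TreeSet<>();
        for (Set<Integer> postings : termsWithPrefix(prefix).values()) {
            hits.addAll(postings);
        }
        return hits;
    }

    int termsVisited(String prefix) {
        return termsWithPrefix(prefix).size();
    }

    public static void main(String[] args) {
        // Option 1 stores each value whole (not analyzed): one term per document.
        PrefixVsTermDemo fullPinyinField = new PrefixVsTermDemo();
        fullPinyinField.add(1, "liudehua");
        fullPinyinField.add(2, "liubin");
        fullPinyinField.add(3, "liudezhi");
        System.out.println(fullPinyinField.termQuery("liud"));   // []
        System.out.println(fullPinyinField.prefixQuery("liud")); // [1, 3]
        System.out.println(fullPinyinField.termsVisited("liu")); // 3
    }
}
```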

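Option 2: derive three sub-fields from one field (multi-field) to hold the Chinese text, the pinyin initials and the full pinyin. Each sub-field's analyzer includes an edge_ngram token filter, so every token is split into prefixes before it is written to the inverted index. At search time, query all the sub-fields. (Pros: compact format; lookups use exact term matching and the query input does not need to be analyzed, so queries are fast. Cons: it trades space for time, which is acceptable for an index. The sub-fields also add complexity to both storage and querying, and searching three fields adds their relevance scores together, which muddles the relevance ranking.)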
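A toy sketch of option 2's scoring drawback follows. It assumes one point per matching sub-field as a crude stand-in for summed relevance scores, and the sub-field names are hypothetical. The one-letter query l hits both pinyin sub-fields and outscores the more specific liu, which hits only one.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical toy model: one document (刘德华) indexed into three sub-fields of edge n-grams.
 * Each sub-field containing the query term adds one point (a stand-in for summed scores).
 */
class MultiFieldScoreDemo {
    static final int MIN_GRAM = 1, MAX_GRAM = 15;

    /** Every prefix of length MIN_GRAM..MAX_GRAM, like an edge_ngram token filter. */
    static Set<String> edgeNgrams(String value) {
        Set<String> grams = new HashSet<>();
        for (int len = MIN_GRAM; len <= Math.min(MAX_GRAM, value.length()); len++) {
            grams.add(value.substring(0, len));
        }
        return grams;
    }

    // Hypothetical sub-field names; the pinyin values are written out by hand.
    static final Map<String, Set<String>> SUB_FIELDS = Map.of(
            "full_name", edgeNgrams("刘德华"),
            "full_name.full_pinyin", edgeNgrams("liudehua"),
            "full_name.initials", edgeNgrams("ldh"));

    /** Sums one point per sub-field whose terms include the query term. */
    static int score(String term) {
        int score = 0;
        for (Set<String> grams : SUB_FIELDS.values()) {
            if (grams.contains(term)) {
                score++;
            }
        }
        return score;
    }

    public static void main(String[] args) {
        System.out.println(score("l"));   // 2: both pinyin sub-fields
        System.out.println(score("liu")); // 1: full pinyin only
        System.out.println(score("ldh")); // 1: initials only
    }
}
```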

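Option 3: store the data in a single field that is not split into words (its analyzer uses the keyword tokenizer). When the inverted index is built, generate all three kinds of terms (Chinese, full pinyin, initials) and split each of them further with edge_ngram to cover the required scenarios. At search time, the query input is not analyzed. (Pros: simple storage, and a search needs only one exact term query on one field, which is very fast. Cons: it also trades space for time, though less than option 2, which is acceptable for the index.)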
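Here is a toy sketch of option 3 under the same assumptions: a hand-written pinyin dictionary and hypothetical names. All three kinds of terms are expanded with edge n-grams into one field, and a query is a single exact lookup. As noted in the examples above, 德华 still finds nothing, because only prefixes are indexed.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical toy model of option 3: one field, three kinds of terms, exact term lookup. */
class SingleFieldPrefixIndex {
    static final int MIN_GRAM = 1, MAX_GRAM = 15; // same values as autocomplete_filter
    // Toy dictionary for the example characters only (assumption; the pinyin plugin does this in ES).
    static final Map<Character, String> PINYIN = Map.of(
            '刘', "liu", '德', "de", '华', "hua", '斌', "bin", '志', "zhi");

    // Inverted index: term -> names that produced it.
    private final Map<String, Set<String>> index = new HashMap<>();

    static String fullPinyin(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toCharArray()) {
            sb.append(PINYIN.getOrDefault(c, String.valueOf(c)));
        }
        return sb.toString();
    }

    static String initials(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toCharArray()) {
            String py = PINYIN.get(c);
            sb.append(py != null ? py.charAt(0) : c);
        }
        return sb.toString();
    }

    /** Adds every prefix of length MIN_GRAM..MAX_GRAM, like an edge_ngram token filter. */
    static void addEdgeNgrams(String value, Set<String> out) {
        for (int len = MIN_GRAM; len <= Math.min(MAX_GRAM, value.length()); len++) {
            out.add(value.substring(0, len));
        }
    }

    /** Index time: original text, full pinyin and initials all go into the same field. */
    void add(String name) {
        String original = name.toLowerCase(Locale.ROOT);
        Set<String> terms = new LinkedHashSet<>();
        addEdgeNgrams(original, terms);
        addEdgeNgrams(fullPinyin(original), terms);
        addEdgeNgrams(initials(original), terms);
        for (String term : terms) {
            index.computeIfAbsent(term, t -> new TreeSet<>()).add(name);
        }
    }

    /** Query time: the input is not analyzed; it is looked up as one exact term. */
    Set<String> termQuery(String input) {
        return index.getOrDefault(input, Collections.emptySet());
    }

    public static void main(String[] args) {
        SingleFieldPrefixIndex idx = new SingleFieldPrefixIndex();
        for (String name : new String[] {"刘德华", "刘斌", "刘德志"}) {
            idx.add(name);
        }
        System.out.println(idx.termQuery("刘德")); // [刘德华, 刘德志]
        System.out.println(idx.termQuery("liud")); // [刘德华, 刘德志]
        System.out.println(idx.termQuery("ldz"));  // [刘德志]
    }
}
```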

Elasticsearch offers many more ways to handle this scenario. These three are the typical ones, and option 3 is the one used here.

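Preparation:

- Installing the pinyin analysis plugin and understanding its parameters

- Using Elasticsearch edge_ngram

- Using Elasticsearch multi-field

- Getting familiar with Elasticsearch's various query features

Code: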

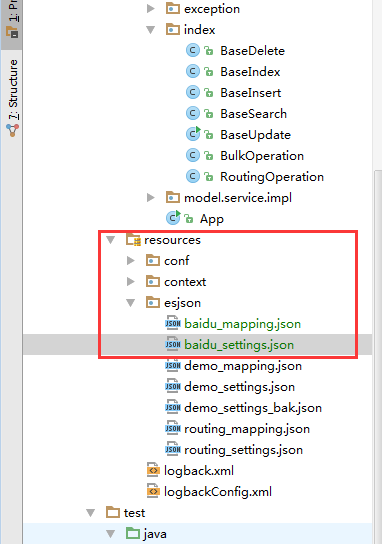

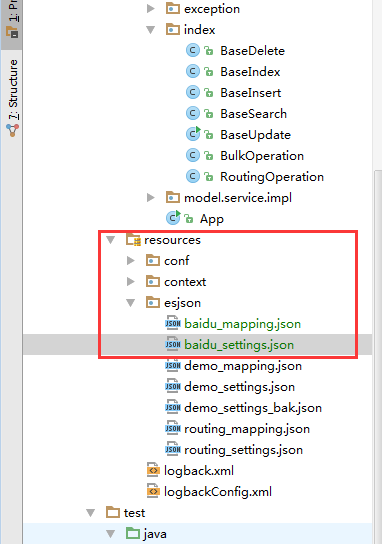

baidu_settings.json:

```json
{
  "refresh_interval": "2s",
  "number_of_replicas": 1,
  "number_of_shards": 2,
  "analysis": {
    "filter": {
      "autocomplete_filter": {
        "type": "edge_ngram",
        "min_gram": 1,
        "max_gram": 15
      },
      "pinyin_first_letter_and_full_pinyin_filter": {
        "type": "pinyin",
        "keep_first_letter": true,
        "keep_full_pinyin": false,
        "keep_joined_full_pinyin": true,
        "keep_none_chinese": false,
        "keep_original": false,
        "limit_first_letter_length": 16,
        "lowercase": true,
        "trim_whitespace": true,
        "keep_none_chinese_in_first_letter": true
      },
      "full_pinyin_filter": {
        "type": "pinyin",
        "keep_first_letter": true,
        "keep_full_pinyin": false,
        "keep_joined_full_pinyin": true,
        "keep_none_chinese": false,
        "keep_original": true,
        "limit_first_letter_length": 16,
        "lowercase": true,
        "trim_whitespace": true,
        "keep_none_chinese_in_first_letter": true
      }
    },
    "analyzer": {
      "full_prefix_analyzer": {
        "type": "custom",
        "char_filter": ["html_strip"],
        "tokenizer": "keyword",
        "filter": ["lowercase", "full_pinyin_filter", "autocomplete_filter"]
      },
      "chinese_analyzer": {
        "type": "custom",
        "char_filter": ["html_strip"],
        "tokenizer": "keyword",
        "filter": ["lowercase", "autocomplete_filter"]
      },
      "pinyin_analyzer": {
        "type": "custom",
        "char_filter": ["html_strip"],
        "tokenizer": "keyword",
        "filter": ["pinyin_first_letter_and_full_pinyin_filter", "autocomplete_filter"]
      }
    }
  }
}
```

baidu_mapping.json:

```json
{
  "baidu_type": {
    "properties": {
      "full_name": {
        "type": "text",
        "analyzer": "full_prefix_analyzer"
      },
      "age": {
        "type": "integer"
      }
    }
  }
}
```
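The mapping sets `analyzer` on `full_name` but no `search_analyzer`. As a result, a full-text `match` query would run `full_prefix_analyzer` on the search input as well, which is one reason option 3 uses exact term lookups without analyzing the input. The sketch below is a simplification: it indexes only full-pinyin grams and models only the edge_ngram part of the query-time analysis, roughly with OR semantics between the resulting grams. Under those assumptions, the gram l alone is enough to pull in 刘斌 for the input liud. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical toy model: term lookup vs. running the query through the index-time edge_ngram step. */
class MatchVsTermDemo {
    static final int MIN_GRAM = 1, MAX_GRAM = 15;
    private final Map<String, Set<String>> index = new HashMap<>();

    static List<String> edgeNgrams(String value) {
        List<String> grams = new ArrayList<>();
        for (int len = MIN_GRAM; len <= Math.min(MAX_GRAM, value.length()); len++) {
            grams.add(value.substring(0, len));
        }
        return grams;
    }

    /** Indexes the edge n-grams of a name's full pinyin (supplied by hand here). */
    void add(String name, String fullPinyin) {
        for (String gram : edgeNgrams(fullPinyin)) {
            index.computeIfAbsent(gram, g -> new TreeSet<>()).add(name);
        }
    }

    /** Term-query style: the input is looked up unchanged. */
    Set<String> termQuery(String input) {
        return index.getOrDefault(input, Collections.emptySet());
    }

    /** Match-query style: the input is split into grams, and any gram may match (OR). */
    Set<String> matchQuery(String input) {
        Set<String> hits = new TreeSet<>();
        for (String gram : edgeNgrams(input)) {
            hits.addAll(termQuery(gram));
        }
        return hits;
    }

    public static void main(String[] args) {
        MatchVsTermDemo demo = new MatchVsTermDemo();
        demo.add("刘德华", "liudehua");
        demo.add("刘斌", "liubin");
        demo.add("刘德志", "liudezhi");
        System.out.println(demo.termQuery("liud"));         // [刘德华, 刘德志]
        System.out.println(demo.matchQuery("liud").size()); // 3: the gram "l" also matches 刘斌
    }
}
```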

```java
import java.util.ArrayList;
import java.util.List;

import org.elasticsearch.client.transport.TransportClient;
import org.junit.Test;

// ESConnect, BaseIndex, BulkOperation and BulkInsert are the author's own wrapper classes (not shown here).
public class PrefixTest {

    @Test
    public void testCreateIndex() throws Exception {
        TransportClient client = ESConnect.getInstance().getTransportClient();
        // Create the index with the analysis settings from baidu_settings.json
        BaseIndex.createWithSetting(client, "baidu_index", "esjson/baidu_settings.json");
        // Define the type and its field mapping from baidu_mapping.json
        BaseIndex.createMapping(client, "baidu_index", "baidu_type", "esjson/baidu_mapping.json");
    }

    @Test
    public void testBulkInsert() throws Exception {
        TransportClient client = ESConnect.getInstance().getTransportClient();
        List<Object> list = new ArrayList<>();
        // Arguments presumably map to (id, full_name, age) in baidu_mapping.json
        list.add(new BulkInsert(12L, "我们都有一个家名字叫中国", 12));
        list.add(new BulkInsert(13L, "兄弟姐妹都很多景色也不错 ", 13));
        list.add(new BulkInsert(14L, "家里盘着两条龙是长江与黄河", 14));
        list.add(new BulkInsert(15L, "还有珠穆朗玛峰儿是最高山坡", 15));
        list.add(new BulkInsert(16L, "我们都有一个家名字叫中国", 16));
        list.add(new BulkInsert(17L, "兄弟姐妹都很多景色也不错", 17));
        list.add(new BulkInsert(18L, "看那一条长城万里在云中穿梭", 18));
        boolean flag = BulkOperation.batchInsert(client, "baidu_index", "baidu_type", list);
        System.out.println(flag);
    }
}
```

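Sorry, the code is wrapped in my own helper classes. How to create an index from Java is easy to look up online. The point here is not the Java code but the approach described above.

Next, check the output of the custom analyzer: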

http://192.168.20.114:9200/baidu_index/_analyze?text=刘德华AT2016&analyzer=full_prefix_analyzer

```json
{
  "tokens": [
    { "token": "刘", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华a", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华at", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华at2", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华at20", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华at201", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "刘德华at2016", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "l", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "li", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "liu", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "liud", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "liude", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "liudeh", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "liudehu", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "liudehua", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "l", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ld", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldh", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldha", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldhat", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldhat2", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldhat20", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldhat201", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 },
    { "token": "ldhat2016", "start_offset": 0, "end_offset": 9, "type": "word", "position": 0 }
  ]
}
```

And that's it.
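The 26 tokens in the `_analyze` response above can be reproduced offline with a rough approximation of `full_prefix_analyzer`. The chain is the keyword tokenizer, then lowercase, then a toy stand-in for `full_pinyin_filter`, then edge_ngram(1, 15). The pinyin step is an assumption inferred from the output shown, not the plugin's actual implementation. It uses a hand-written dictionary, and any character not in that dictionary is treated as non-Chinese. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Rough, hypothetical approximation of full_prefix_analyzer for illustration only. */
class FullPrefixAnalyzerSketch {
    static final int MIN_GRAM = 1, MAX_GRAM = 15;        // autocomplete_filter
    static final int LIMIT_FIRST_LETTER_LENGTH = 16;     // limit_first_letter_length
    // Toy dictionary for the sample characters; anything missing is treated as non-Chinese.
    static final Map<Character, String> PINYIN = Map.of('刘', "liu", '德', "de", '华', "hua");

    static List<String> analyze(String text) {
        // keyword tokenizer: the whole input is one token; then the lowercase filter
        String token = text.toLowerCase(Locale.ROOT);

        StringBuilder joinedFull = new StringBuilder();   // keep_joined_full_pinyin, keep_none_chinese=false
        StringBuilder firstLetters = new StringBuilder(); // keep_first_letter, keep_none_chinese_in_first_letter=true
        for (char c : token.toCharArray()) {
            String py = PINYIN.get(c);
            if (py != null) {
                joinedFull.append(py);
                firstLetters.append(py.charAt(0));
            } else {
                firstLetters.append(c);
            }
        }
        String initials = firstLetters.length() > LIMIT_FIRST_LETTER_LENGTH
                ? firstLetters.substring(0, LIMIT_FIRST_LETTER_LENGTH)
                : firstLetters.toString();

        // Pinyin tokens in the order the _analyze output lists them:
        // original (keep_original), joined full pinyin, initials; each expanded by edge_ngram.
        List<String> out = new ArrayList<>();
        for (String t : List.of(token, joinedFull.toString(), initials)) {
            for (int len = MIN_GRAM; len <= Math.min(MAX_GRAM, t.length()); len++) {
                out.add(t.substring(0, len));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Prints the same 26 tokens, in the same order, as the _analyze response above.
        System.out.println(analyze("刘德华AT2016"));
    }
}
```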

References:

http://blog.csdn.net/napoay/article/details/53907921

https://elasticsearch.cn/question/407

http://blog.csdn.net/xifeijian/article/details/51095762

http://www.cnblogs.com/xing901022/p/5910139.html

http://www.cnblogs.com/clonen/p/6674492.html

I will write up the full-text search part later when I have time.