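In the previous post we installed a Hadoop cluster on Ubuntu. In this one we run the customary first program, WordCount, on that cluster. This serves two purposes:

- First, it verifies that the Hadoop cluster really was set up successfully

- Second, it is a chance to get familiar with how a Hadoop job is run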

1.Create a wordcount file locally

    root@master:~# vim wordcount.txt
    root@master:~# cat wordcount.txt
    hello
    world
    hello

2.Upload the file to HDFS

    root@master:~# hadoop dfs -put ./wordcount.txt /firstTestDir
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    root@master:~# hadoop dfs -ls /
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    Found 2 items
    drwxr-xr-x   - root supergroup          0 2018-05-14 10:03 /firstTestDir
    drwx------   - root supergroup          0 2018-05-14 09:42 /tmp
    root@master:~# hadoop dfs -ls /firstTestDir
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    Found 2 items
    -rw-r--r--   1 root supergroup      22002 2018-05-13 21:14 /firstTestDir/a.txt
    -rw-r--r--   1 root supergroup         19 2018-05-14 10:03 /firstTestDir/wordcount.txt
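The warning in the output above itself recommends the hdfs command over hadoop dfs. As a minimal Python sketch, assuming the hdfs launcher from the Hadoop installation is on the PATH of the master node, the upload and listing steps could be scripted like this. The helpers hdfs_dfs and run are hypothetical names for illustration, and the script only prints the commands unless run is called with dry_run=False:

```python
import shlex
import subprocess
from typing import List


def hdfs_dfs(*args: str) -> List[str]:
    """Build the argv for 'hdfs dfs ...', the non-deprecated form of 'hadoop dfs ...'."""
    return ["hdfs", "dfs", *args]


def run(argv: List[str], dry_run: bool = True) -> None:
    """Print the command line; execute it only when dry_run is False (needs a Hadoop client)."""
    print(shlex.join(argv))
    if not dry_run:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # The same steps as in the transcript above: upload, then list the target directory.
    run(hdfs_dfs("-put", "./wordcount.txt", "/firstTestDir"))
    run(hdfs_dfs("-ls", "/firstTestDir"))
```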

3.Run wordcount

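3.1 Hadoop already ships with some example programs, located under share/hadoop/mapreduce in the Hadoop installation directory (here /opt/hadoop-2.6.5/share/hadoop/mapreduce)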

    root@master:~# cd /opt/hadoop-2.6.5/share/hadoop/mapreduce
    root@master:/opt/hadoop-2.6.5/share/hadoop/mapreduce# l
    hadoop-mapreduce-client-app-2.6.5.jar     hadoop-mapreduce-client-hs-2.6.5.jar          hadoop-mapreduce-client-jobclient-2.6.5-tests.jar  lib/
    hadoop-mapreduce-client-common-2.6.5.jar  hadoop-mapreduce-client-hs-plugins-2.6.5.jar  hadoop-mapreduce-client-shuffle-2.6.5.jar          lib-examples/
    hadoop-mapreduce-client-core-2.6.5.jar    hadoop-mapreduce-client-jobclient-2.6.5.jar   hadoop-mapreduce-examples-2.6.5.jar                sources/

The one we need is hadoop-mapreduce-examples-2.6.5.jar.

3.2 Run the job

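The format is: hadoop jar [jar path] [program name] [input path] [output path]. For the examples jar, the program name selects one of the bundled examples (wordcount here), so it cannot be chosen freely. The output directory must not exist yet, otherwise the job is rejected at submission.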
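To make the argument order concrete, here is a small Python sketch that assembles the same command and refuses to submit when the output directory already exists. It assumes hadoop and hdfs are on the PATH and that the jar lives at the path listed in 3.1; all function names are hypothetical. The existence check relies on hdfs dfs -test -d, which exits with status 0 when the directory exists:

```python
import subprocess
from typing import List

# Assumed location, taken from the directory listing in 3.1.
EXAMPLES_JAR = "/opt/hadoop-2.6.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar"


def hadoop_jar_cmd(jar: str, program: str, input_path: str, output_path: str) -> List[str]:
    """Assemble: hadoop jar [jar path] [program name] [input path] [output path]."""
    return ["hadoop", "jar", jar, program, input_path, output_path]


def output_exists(path: str) -> bool:
    """Return True if 'hdfs dfs -test -d path' exits with 0, i.e. the directory exists."""
    return subprocess.run(["hdfs", "dfs", "-test", "-d", path]).returncode == 0


def submit_wordcount(input_path: str, output_path: str) -> None:
    # The job would be rejected at submission if the output directory already exists,
    # so fail early with a clearer message.
    if output_exists(output_path):
        raise RuntimeError(f"{output_path} already exists; remove it or choose another path")
    subprocess.run(hadoop_jar_cmd(EXAMPLES_JAR, "wordcount", input_path, output_path), check=True)
```

On the master node, submit_wordcount("/firstTestDir/wordcount.txt", "/output/") would correspond to the run shown below.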

    root@master:/opt/hadoop-2.6.5/share/hadoop/mapreduce# hadoop jar hadoop-mapreduce-examples-2.6.5.jar wordcount /firstTestDir/wordcount.txt /output/
    18/05/14 10:04:10 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.122.10:8032
    18/05/14 10:04:11 INFO input.FileInputFormat: Total input paths to process : 1
    18/05/14 10:04:11 INFO mapreduce.JobSubmitter: number of splits:1
    18/05/14 10:04:11 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526216753716_0004
    18/05/14 10:04:11 INFO impl.YarnClientImpl: Submitted application application_1526216753716_0004
    18/05/14 10:04:11 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1526216753716_0004/
    18/05/14 10:04:11 INFO mapreduce.Job: Running job: job_1526216753716_0004
    18/05/14 10:04:18 INFO mapreduce.Job: Job job_1526216753716_0004 running in uber mode : false
    18/05/14 10:04:18 INFO mapreduce.Job:  map 0% reduce 0%
    18/05/14 10:04:22 INFO mapreduce.Job:  map 100% reduce 0%
    18/05/14 10:04:27 INFO mapreduce.Job:  map 100% reduce 100%
    18/05/14 10:04:28 INFO mapreduce.Job: Job job_1526216753716_0004 completed successfully
    18/05/14 10:04:28 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=30
            FILE: Number of bytes written=214671
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=129
            HDFS: Number of bytes written=16
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=1
            Launched reduce tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=2386
            Total time spent by all reduces in occupied slots (ms)=2509
            Total time spent by all map tasks (ms)=2386
            Total time spent by all reduce tasks (ms)=2509
            Total vcore-milliseconds taken by all map tasks=2386
            Total vcore-milliseconds taken by all reduce tasks=2509
            Total megabyte-milliseconds taken by all map tasks=2443264
            Total megabyte-milliseconds taken by all reduce tasks=2569216
        Map-Reduce Framework
            Map input records=3
            Map output records=3
            Map output bytes=30
            Map output materialized bytes=30
            Input split bytes=110
            Combine input records=3
            Combine output records=2
            Reduce input groups=2
            Reduce shuffle bytes=30
            Reduce input records=2
            Reduce output records=2
            Spilled Records=4
            Shuffled Maps =1
            Failed Shuffles=0
            Merged Map outputs=1
            GC time elapsed (ms)=143
            CPU time spent (ms)=1680
            Physical memory (bytes) snapshot=433512448
            Virtual memory (bytes) snapshot=3938676736
            Total committed heap usage (bytes)=322961408
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=19
        File Output Format Counters
            Bytes Written=16

OK!

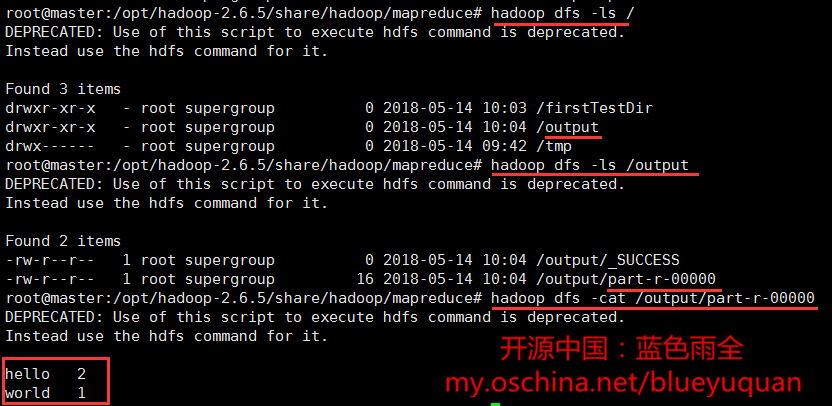

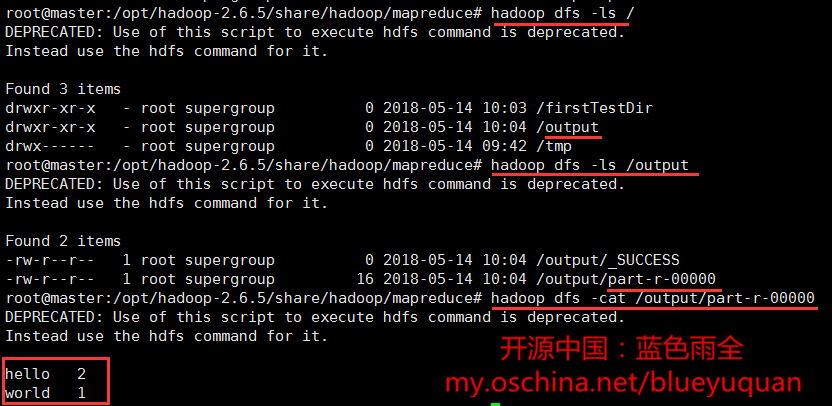
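The Map-Reduce Framework counters above can be traced by simulating the job in plain Python. This is an in-memory sketch, not Hadoop's code: it assumes the input file holds the three lines hello, world and hello (consistent with Map input records=3 and Map output records=3), splits on whitespace roughly as the example's tokenizer does, and uses the same summing function as both combiner and reducer, as the bundled WordCount does with IntSumReducer:

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def map_phase(lines: Iterable[str]) -> Iterator[Pair]:
    # Mapper: split each input record (one line) on whitespace and emit (word, 1).
    for line in lines:
        for word in line.split():
            yield word, 1


def sum_by_key(pairs: Iterable[Pair]) -> List[Pair]:
    # Shared by combiner and reducer: group by key and add up the values.
    totals: Dict[str, int] = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    # Return pairs sorted by key, mimicking the sort that precedes combine and reduce.
    return sorted(totals.items())


def wordcount(lines: List[str]) -> Tuple[List[Pair], Dict[str, int]]:
    mapped = list(map_phase(lines))
    combined = sum_by_key(mapped)   # map side, before the shuffle
    reduced = sum_by_key(combined)  # one reducer, so it sees every key
    counters = {
        "Map input records": len(lines),
        "Map output records": len(mapped),
        "Combine input records": len(mapped),
        "Combine output records": len(combined),
        "Reduce input records": len(combined),
        "Reduce output records": len(reduced),
    }
    return reduced, counters


if __name__ == "__main__":
    result, counters = wordcount(["hello", "world", "hello"])
    print(result)  # [('hello', 2), ('world', 1)]
    for name, value in counters.items():
        print(f"{name}={value}")
```

With one map task and one reduce task, as in the log, the combiner already collapses the three (word, 1) pairs into two, which is why Combine output records, Reduce input records and Reduce output records are all 2.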

3.3 Verify the result

    root@master:/opt/hadoop-2.6.5/share/hadoop/mapreduce# hadoop dfs -ls /
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    Found 3 items
    drwxr-xr-x   - root supergroup          0 2018-05-14 10:03 /firstTestDir
    drwxr-xr-x   - root supergroup          0 2018-05-14 10:04 /output    # a new output directory has appeared
    drwx------   - root supergroup          0 2018-05-14 09:42 /tmp
    root@master:/opt/hadoop-2.6.5/share/hadoop/mapreduce# hadoop dfs -ls /output
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    Found 2 items
    -rw-r--r--   1 root supergroup          0 2018-05-14 10:04 /output/_SUCCESS
    -rw-r--r--   1 root supergroup         16 2018-05-14 10:04 /output/part-r-00000    # this file holds the final result
    root@master:/opt/hadoop-2.6.5/share/hadoop/mapreduce# hadoop dfs -cat /output/part-r-00000
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    hello	2    # OK!
    world	1

As you can see, the job ran successfully!

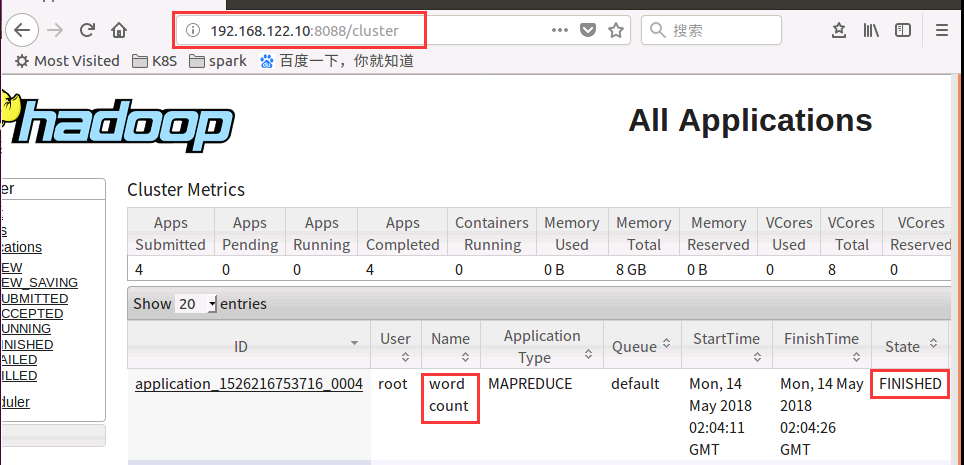

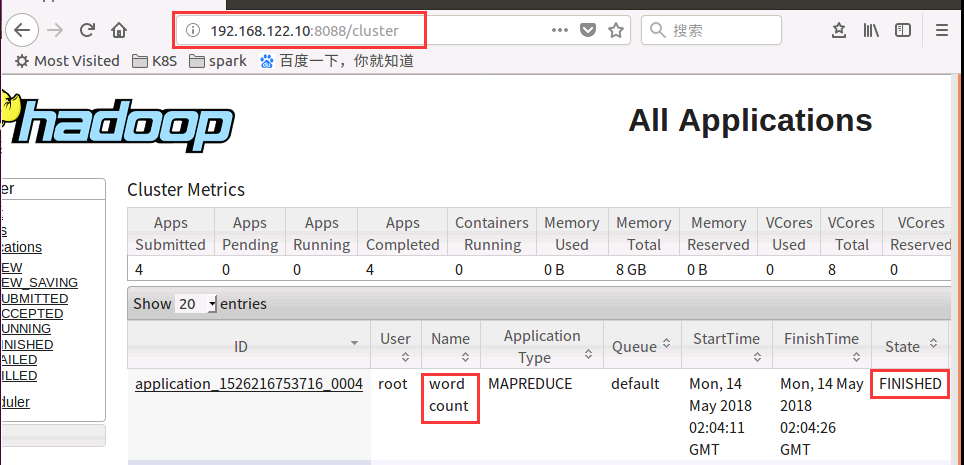
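The part-r-00000 file is plain text written by the default TextOutputFormat: one key and value per line, separated by a tab. That also accounts for its 16-byte size, since each of the two lines (word, tab, count, newline) is 8 bytes. A minimal Python sketch for reading such a file back, using the output of the run above as sample input (parse_part_file is a hypothetical helper):

```python
from typing import Dict


def parse_part_file(text: str, sep: str = "\t") -> Dict[str, int]:
    """Parse TextOutputFormat lines of the form 'key<sep>value' into a dict."""
    result: Dict[str, int] = {}
    for line in text.splitlines():
        if not line:
            continue  # skip blank lines, if any
        key, value = line.rsplit(sep, 1)
        result[key] = int(value)
    return result


if __name__ == "__main__":
    # Content of /output/part-r-00000 from the run above, with the default tab separator.
    part = "hello\t2\nworld\t1\n"
    print(parse_part_file(part))       # {'hello': 2, 'world': 1}
    print(len(part.encode("utf-8")))   # 16, matching the size shown by -ls /output
```

To read the real file instead of the sample string, its text can be captured with subprocess.run(["hdfs", "dfs", "-cat", "/output/part-r-00000"], capture_output=True, text=True, check=True).stdout and passed to parse_part_file.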

4.Runtime screenshots

Below is the result as shown in the Hadoop web UI:

![Hadoop web UI](https://static.oschina.net/uploads/img/201805/14102425_opby.png)