## Overview

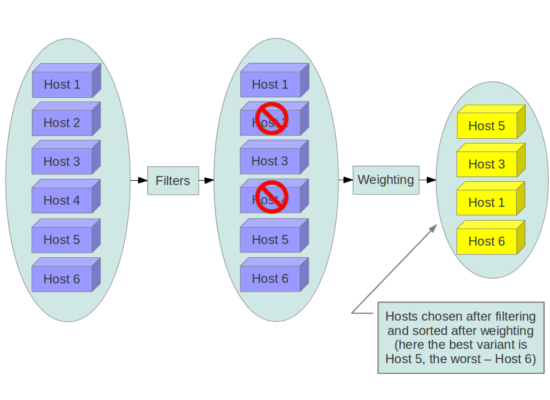

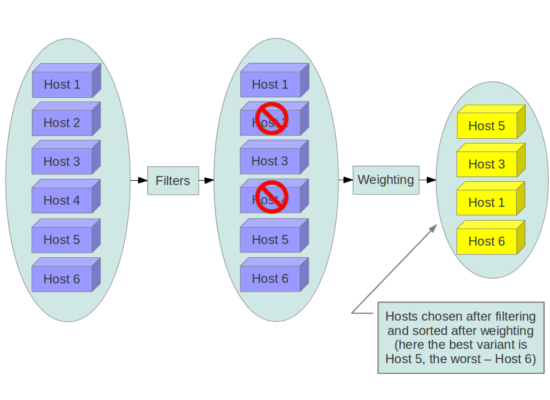

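When a new VM instance is created, the Nova Scheduler uses the configured Filter Scheduler to filter and then weigh all compute nodes. It returns a list of usable hosts ranked by weight and limited to the number of instances the user requested. If this step fails, no valid host is available.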
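
The filter-then-weigh flow described above can be sketched in a few lines of Python. This is a simplified illustration, not Nova's implementation: `Host`, `ram_filter`, `ram_weigher` and `select_hosts` are hypothetical names, and the single RAM filter and weigher stand in for whatever filters and weighers are actually configured.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Host:
    """Hypothetical, heavily simplified host record (not Nova's HostState)."""
    name: str
    free_ram_mb: int


def ram_filter(host: Host, requested_ram_mb: int) -> bool:
    # A filter answers yes/no: does this host have enough free RAM?
    return host.free_ram_mb >= requested_ram_mb


def ram_weigher(host: Host) -> float:
    # A weigher returns a score: more free RAM means a higher weight.
    return float(host.free_ram_mb)


def select_hosts(hosts: List[Host], requested_ram_mb: int, num_instances: int,
                 filters: List[Callable[[Host, int], bool]],
                 weigher: Callable[[Host], float]) -> List[Host]:
    # 1. Filtering: keep only hosts that pass every filter.
    candidates = [h for h in hosts
                  if all(f(h, requested_ram_mb) for f in filters)]
    if not candidates:
        # Roughly what Nova reports as "No valid host was found".
        raise RuntimeError("No valid host was found")
    # 2. Weighing: highest weight first.
    ranked = sorted(candidates, key=weigher, reverse=True)
    # 3. Return at most as many hosts as instances were requested.
    return ranked[:num_instances]


hosts = [Host("osdev-01", 2048), Host("osdev-02", 512), Host("osdev-03", 8192)]
chosen = select_hosts(hosts, requested_ram_mb=1024, num_instances=2,
                      filters=[ram_filter], weigher=ram_weigher)
print([h.name for h in chosen])  # ['osdev-03', 'osdev-01']
```

osdev-02 is dropped by the filter, and the remaining hosts are ordered by free RAM.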

### Standard Filters

- AllHostsFilter - performs no filtering; every visible host passes.

- ImagePropertiesFilter - filters on image metadata.

- AvailabilityZoneFilter - filters by availability zone (Availability Zone metadata).

- ComputeCapabilitiesFilter - filters on compute capabilities: the requirements specified when the VM is requested (flavor extra specs) are matched against the host's attributes and state to decide whether the host passes. The available operators are:

  * `=` (equal to or greater than as a number; same as vcpus case)
  * `==` (equal to as a number)
  * `!=` (not equal to as a number)
  * `>=` (greater than or equal to as a number)
  * `<=` (less than or equal to as a number)
  * `s==` (equal to as a string)
  * `s!=` (not equal to as a string)
  * `s>=` (greater than or equal to as a string)
  * `s>` (greater than as a string)
  * `s<=` (less than or equal to as a string)
  * `s<` (less than as a string)
  * `<in>` (substring)
  * `<all-in>` (all elements contained in collection)
  * `<or>` (find one of these)

  Examples are: `>= 5`, `s== 2.1.0`, `<in> gcc`, `<all-in> aes mmx`, and `<or> fpu <or> gpu`.

  Some of the available attributes:

  * free_ram_mb (compared with a number, values like `>= 4096`)
  * free_disk_mb (compared with a number, values like `>= 10240`)
  * host (compared with a string, values like `<in> compute`, `s== compute_01`)
  * hypervisor_type (compared with a string, values like `s== QEMU`, `s== powervm`)
  * hypervisor_version (compared with a number, values like `>= 1005003`, `== 2000000`)
  * num_instances (compared with a number, values like `<= 10`)
  * num_io_ops (compared with a number, values like `<= 5`)
  * vcpus_total (compared with a number, values like `= 48`, `>= 24`)
  * vcpus_used (compared with a number, values like `= 0`, `<= 10`)

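- AggregateInstanceExtraSpecsFilter - filters on additional host properties (Host Aggregate metadata); works like ComputeCapabilitiesFilter.

- ComputeFilter - filters on host state and service availability.

- CoreFilter / AggregateCoreFilter - filters on the number of remaining available CPUs.

- IsolatedHostsFilter - filters according to the `isolated_images`, `isolated_hosts` and `restrict_isolated_hosts_to_isolated_images` options in nova.conf; used to isolate nodes.

- JsonFilter - filters according to a JSON query expression.

- RamFilter / AggregateRamFilter - filters on available memory.

- DiskFilter / AggregateDiskFilter - filters on available disk space.

- NumInstancesFilter / AggregateNumInstancesFilter - filters on the number of instances on a node.

- IoOpsFilter / AggregateIoOpsFilter - filters on the host's I/O load.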

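- PciPassthroughFilter - filters on the requested PCI devices.

- SimpleCIDRAffinityFilter - schedules the VM on a host within a given IP subnet.

- SameHostFilter - starts the instance on the same host as a given instance.

- RetryFilter - filters out hosts that have already been tried.

- AggregateTypeAffinityFilter - restricts which instance types (flavors) can be created in an aggregate.

- ServerGroupAntiAffinityFilter - places the instances of a server group on different hosts. This is a hard constraint; the soft variant is handled by ServerGroupSoftAntiAffinityWeigher.

- ServerGroupAffinityFilter - places the instances of a server group on the same host. This is a hard constraint; the soft variant is handled by ServerGroupSoftAffinityWeigher.

- AggregateMultiTenancyIsolation - isolates tenants to designated aggregates.

- AggregateImagePropertiesIsolation - isolates hosts by matching image properties against aggregate metadata.

- MetricsFilter - filters hosts according to the `weight_setting` option; only hosts that report the required metrics pass.

- NUMATopologyFilter - filters hosts according to the instance's NUMA requirements.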
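
To make a resource filter such as RamFilter more concrete, here is a sketch of a RamFilter-style check. It assumes the common approach of allowing memory oversubscription by multiplying the host's usable RAM by `ram_allocation_ratio` (a field that also appears in `HostState` later in this article); the function name and signature are hypothetical.

```python
def ram_filter_passes(total_usable_ram_mb: int, free_ram_mb: int,
                      requested_ram_mb: int,
                      ram_allocation_ratio: float) -> bool:
    """Hypothetical RamFilter-style check with memory oversubscription."""
    # With oversubscription the host may hand out more RAM than it has.
    memory_mb_limit = total_usable_ram_mb * ram_allocation_ratio
    # RAM already promised to existing instances.
    used_ram_mb = total_usable_ram_mb - free_ram_mb
    # What is still available under the (possibly oversubscribed) limit.
    usable_ram_mb = memory_mb_limit - used_ram_mb
    return usable_ram_mb >= requested_ram_mb


# An 8 GB host with 1 GB free, asked for 2 GB:
print(ram_filter_passes(8192, 1024, 2048, 1.0))  # False: no oversubscription
print(ram_filter_passes(8192, 1024, 2048, 1.5))  # True: limit is 12288 MB
```

The CPU and disk filters follow the same pattern with their own allocation ratios.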

### Weighing

If more than one host remains after filtering, the hosts are weighed and the host with the highest weight is chosen. The formula is:

weight = w1_multiplier * norm(w1) + w2_multiplier * norm(w2) + ...

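Each term is a multiplier (wN_multiplier) times a normalized weight (norm(wN)), where norm() scales a weigher's raw values across the candidate hosts into the range 0.0 to 1.0. The multipliers are read from the configuration file, and the raw weights are produced dynamically by weighers (weight objects). The main weighers currently available are: RAMWeigher, DiskWeigher, MetricsWeigher, IoOpsWeigher, PCIWeigher, ServerGroupSoftAffinityWeigher, and ServerGroupSoftAntiAffinityWeigher.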
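
The weighing formula can be illustrated with a small, self-contained sketch. It assumes min-max normalization (each weigher's raw values are scaled to 0.0 to 1.0 across the candidate hosts, and all-equal values normalize to 0.0); the weigher names `ram` and `disk`, the raw numbers and the multipliers are made up for the example.

```python
from typing import Dict, List, Sequence


def normalize(values: Sequence[float]) -> List[float]:
    """Min-max normalize raw weights to the range [0.0, 1.0].

    Assumption of this sketch: if all values are equal they all become 0.0.
    """
    lo, hi = min(values), max(values)
    if lo == hi:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def total_weights(raw: Dict[str, List[float]],
                  multipliers: Dict[str, float]) -> List[float]:
    """weight = sum over weighers of multiplier * norm(raw values)."""
    num_hosts = len(next(iter(raw.values())))
    totals = [0.0] * num_hosts
    for weigher, values in raw.items():
        for i, norm_value in enumerate(normalize(values)):
            totals[i] += multipliers[weigher] * norm_value
    return totals


# Three candidate hosts, two hypothetical weighers.
raw = {
    "ram": [2048.0, 4096.0, 8192.0],  # free RAM (MB) per host
    "disk": [100.0, 50.0, 0.0],       # free disk (GB) per host
}
multipliers = {"ram": 1.0, "disk": 2.0}
weights = total_weights(raw, multipliers)
best = weights.index(max(weights))  # index of the winning host
```

Because the disk multiplier is twice the RAM multiplier, host 0 (most free disk, least free RAM) wins with a weight of 2.0; raising the RAM multiplier to 3.0 would tip the balance toward host 2.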

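### Common Strategies

Different requirements call for different scheduling strategies, which are built by combining scheduler plugins (filters and weighers). Some common strategies are: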

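- Packing: prefer placing the VM on the host that already runs the most VMs.

- Striping: prefer placing the VM on the host that runs the fewest VMs.

- CPU load balance: prefer placing the VM on the host with the most available cores.

- Memory load balance: prefer placing the VM on the host with the most available memory.

- Affinity: multiple VMs must be placed on the same host.

- AntiAffinity: multiple VMs must be placed on different hosts.

- CPU Utilization load balance: prefer placing the VM on the host with the lowest CPU utilization.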
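
Packing and striping differ only in the direction the weighing pushes. The sketch below uses a hypothetical instance-count weigher (not a Nova class): a positive multiplier favors the busiest host (packing) and a negative one favors the least busy host (striping). Nova's RAMWeigher follows the same idea for memory: a negative `ram_weight_multiplier` stacks instances instead of spreading them.

```python
from typing import Dict


def pick_host(instance_counts: Dict[str, int], multiplier: float) -> str:
    """Return the host with the highest multiplier * instance count.

    Hypothetical instance-count weigher: multiplier > 0 favors busy hosts
    (packing), multiplier < 0 favors idle hosts (striping).
    """
    return max(instance_counts,
               key=lambda host: multiplier * instance_counts[host])


counts = {"osdev-01": 5, "osdev-02": 1, "osdev-03": 3}
packing_choice = pick_host(counts, 1.0)    # 'osdev-01'
striping_choice = pick_host(counts, -1.0)  # 'osdev-02'
```

The CPU and memory load-balance strategies work the same way with free cores or free memory as the raw weight.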

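## Metadata

### Scheduling Test

The various metadata-based filters work in much the same way. Flavor metadata has few predefined values and is the most flexible to work with, so the test below uses the flavor metadata filter.

#### Filter Configuration

Add AggregateInstanceExtraSpecsFilter to the list of enabled filters, then restart the scheduler container: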

```
$ vi /etc/kolla/nova-scheduler/nova.conf
[DEFAULT]
...
scheduler_default_filters = AggregateInstanceExtraSpecsFilter, RetryFilter, RamFilter, DiskFilter, ComputeFilter, ComputeCapabilitiesFilter, ImagePropertiesFilter, ServerGroupAntiAffinityFilter, ServerGroupAffinityFilter
...
$ docker restart nova_scheduler
```

#### Aggregate Configuration

```
$ nova aggregate-create io-fast
+----+---------+-------------------+-------+----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts | Metadata | UUID                                 |
+----+---------+-------------------+-------+----------+--------------------------------------+
| 8  | io-fast | -                 |       |          | 2523c96a-46ee-4fac-ba8a-5b50a4d1ebbd |
+----+---------+-------------------+-------+----------+--------------------------------------+
$ nova aggregate-set-metadata io-fast io=fast
Metadata has been successfully updated for aggregate 8.
+----+---------+-------------------+-------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts | Metadata  | UUID                                 |
+----+---------+-------------------+-------+-----------+--------------------------------------+
| 8  | io-fast | -                 |       | 'io=fast' | 2523c96a-46ee-4fac-ba8a-5b50a4d1ebbd |
+----+---------+-------------------+-------+-----------+--------------------------------------+
$ nova aggregate-add-host io-fast osdev-01
Host osdev-01 has been successfully added for aggregate 8
+----+---------+-------------------+------------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts      | Metadata  | UUID                                 |
+----+---------+-------------------+------------+-----------+--------------------------------------+
| 8  | io-fast | -                 | 'osdev-01' | 'io=fast' | 2523c96a-46ee-4fac-ba8a-5b50a4d1ebbd |
+----+---------+-------------------+------------+-----------+--------------------------------------+
$ nova aggregate-add-host io-fast osdev-02
Host osdev-02 has been successfully added for aggregate 8
+----+---------+-------------------+------------------------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts                  | Metadata  | UUID                                 |
+----+---------+-------------------+------------------------+-----------+--------------------------------------+
| 8  | io-fast | -                 | 'osdev-01', 'osdev-02' | 'io=fast' | 2523c96a-46ee-4fac-ba8a-5b50a4d1ebbd |
+----+---------+-------------------+------------------------+-----------+--------------------------------------+
$ nova aggregate-add-host io-fast osdev-03
Host osdev-03 has been successfully added for aggregate 8
+----+---------+-------------------+------------------------------------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts                              | Metadata  | UUID                                 |
+----+---------+-------------------+------------------------------------+-----------+--------------------------------------+
| 8  | io-fast | -                 | 'osdev-01', 'osdev-02', 'osdev-03' | 'io=fast' | 2523c96a-46ee-4fac-ba8a-5b50a4d1ebbd |
+----+---------+-------------------+------------------------------------+-----------+--------------------------------------+
```

```
$ nova aggregate-create io-slow
+----+---------+-------------------+-------+----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts | Metadata | UUID                                 |
+----+---------+-------------------+-------+----------+--------------------------------------+
| 9  | io-slow | -                 |       |          | d10d2eaf-43d7-464e-bc12-10f18897b476 |
+----+---------+-------------------+-------+----------+--------------------------------------+
$ nova aggregate-set-metadata io-slow io=slow
Metadata has been successfully updated for aggregate 9.
+----+---------+-------------------+-------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts | Metadata  | UUID                                 |
+----+---------+-------------------+-------+-----------+--------------------------------------+
| 9  | io-slow | -                 |       | 'io=slow' | d10d2eaf-43d7-464e-bc12-10f18897b476 |
+----+---------+-------------------+-------+-----------+--------------------------------------+
$ nova aggregate-add-host io-slow osdev-gpu
Host osdev-gpu has been successfully added for aggregate 9
+----+---------+-------------------+-------------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts       | Metadata  | UUID                                 |
+----+---------+-------------------+-------------+-----------+--------------------------------------+
| 9  | io-slow | -                 | 'osdev-gpu' | 'io=slow' | d10d2eaf-43d7-464e-bc12-10f18897b476 |
+----+---------+-------------------+-------------+-----------+--------------------------------------+
$ nova aggregate-add-host io-slow osdev-ceph
Host osdev-ceph has been successfully added for aggregate 9
+----+---------+-------------------+---------------------------+-----------+--------------------------------------+
| Id | Name    | Availability Zone | Hosts                     | Metadata  | UUID                                 |
+----+---------+-------------------+---------------------------+-----------+--------------------------------------+
| 9  | io-slow | -                 | 'osdev-gpu', 'osdev-ceph' | 'io=slow' | d10d2eaf-43d7-464e-bc12-10f18897b476 |
+----+---------+-------------------+---------------------------+-----------+--------------------------------------+
```

#### Flavor Configuration

```
$ openstack flavor create --vcpus 1 --ram 64 --disk 1 machine.fast
$ nova flavor-key machine.fast set io=fast
$ openstack flavor show machine.fast
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| access_project_ids         | None                                 |
| disk                       | 1                                    |
| id                         | 4c8a6d15-270d-464b-bd3b-303d167af4cb |
| name                       | machine.fast                         |
| os-flavor-access:is_public | True                                 |
| properties                 | io='fast'                            |
| ram                        | 64                                   |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
```

```
$ openstack flavor create --vcpus 1 --ram 64 --disk 1 machine.slow
$ nova flavor-key machine.slow set io=slow
$ openstack flavor show machine.slow
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| access_project_ids         | None                                 |
| disk                       | 1                                    |
| id                         | f6a0fdad-3f20-40ed-a4fc-0ba49ff4ff02 |
| name                       | machine.slow                         |
| os-flavor-access:is_public | True                                 |
| properties                 | io='slow'                            |
| ram                        | 64                                   |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
```

#### Creating the VMs

```
$ openstack server create --image cirros --flavor machine.fast --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.fast1
$ openstack server create --image cirros --flavor machine.fast --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.fast2
$ openstack server create --image cirros --flavor machine.fast --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.fast3
```

```
$ openstack server create --image cirros --flavor machine.slow --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.slow1
$ openstack server create --image cirros --flavor machine.slow --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.slow2
$ openstack server create --image cirros --flavor machine.slow --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.slow3
```

```
$ openstack server list --long --column "Name" --column "Status" --column "Networks" --column "Availability Zone" --column "Host"
+--------------------+--------+--------------------+-------------------+------------+
| Name               | Status | Networks           | Availability Zone | Host       |
+--------------------+--------+--------------------+-------------------+------------+
| server.slow3       | ACTIVE | demo-net=10.0.0.20 | az02              | osdev-gpu  |
| server.slow2       | ACTIVE | demo-net=10.0.0.17 | az02              | osdev-ceph |
| server.slow1       | ACTIVE | demo-net=10.0.0.14 | az02              | osdev-gpu  |
| server.fast3       | ACTIVE | demo-net=10.0.0.13 | az01              | osdev-01   |
| server.fast2       | ACTIVE | demo-net=10.0.0.16 | az01              | osdev-02   |
| server.fast1       | ACTIVE | demo-net=10.0.0.15 | az02              | osdev-03   |
+--------------------+--------+--------------------+-------------------+------------+
```
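
The result matches the aggregate setup: every `machine.fast` server landed on an `io-fast` host (osdev-01, osdev-02, osdev-03) and every `machine.slow` server on an `io-slow` host (osdev-gpu, osdev-ceph). The sketch below re-checks this from the tables above. It merges each host's aggregate metadata into a key-to-set-of-values map, similar in spirit to the `utils.aggregate_metadata_get_by_host` helper the filter calls (its real implementation is not shown here), and compares values by plain string equality, which is what happens for operator-free values such as `fast`. All names in the sketch are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Set

# Aggregates as configured above (io-fast: id 8, io-slow: id 9).
AGGREGATES = {
    "io-fast": {"hosts": ["osdev-01", "osdev-02", "osdev-03"],
                "metadata": {"io": "fast"}},
    "io-slow": {"hosts": ["osdev-gpu", "osdev-ceph"],
                "metadata": {"io": "slow"}},
}

# Flavor extra specs as set with `nova flavor-key`.
FLAVOR_EXTRA_SPECS = {"machine.fast": {"io": "fast"},
                      "machine.slow": {"io": "slow"}}

# (server, flavor, host) from the `openstack server list` output.
PLACEMENTS = [
    ("server.fast1", "machine.fast", "osdev-03"),
    ("server.fast2", "machine.fast", "osdev-02"),
    ("server.fast3", "machine.fast", "osdev-01"),
    ("server.slow1", "machine.slow", "osdev-gpu"),
    ("server.slow2", "machine.slow", "osdev-ceph"),
    ("server.slow3", "machine.slow", "osdev-gpu"),
]


def metadata_by_host(aggregates) -> Dict[str, Dict[str, Set[str]]]:
    """Merge the metadata of all aggregates each host belongs to."""
    merged: Dict[str, Dict[str, Set[str]]] = defaultdict(dict)
    for agg in aggregates.values():
        for host in agg["hosts"]:
            for key, value in agg["metadata"].items():
                merged[host].setdefault(key, set()).add(value)
    return dict(merged)


HOST_METADATA = metadata_by_host(AGGREGATES)


def placement_ok(flavor: str, host: str) -> bool:
    # Every extra spec must equal one of the host's aggregate values.
    host_meta = HOST_METADATA.get(host, {})
    return all(value in host_meta.get(key, set())
               for key, value in FLAVOR_EXTRA_SPECS[flavor].items())


results = {server: placement_ok(flavor, host)
           for server, flavor, host in PLACEMENTS}
print(results)  # every server maps to True
```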

### Related Source Code

#### Request Parameters

- Command-line parameters:

```
$ openstack server create
usage: openstack server create [-h] [-f {json,shell,table,value,yaml}]
                               [-c COLUMN] [--max-width <integer>]
                               [--fit-width] [--print-empty] [--noindent]
                               [--prefix PREFIX]
                               (--image <image> | --volume <volume>)
                               --flavor <flavor>
                               [--security-group <security-group-name>]
                               [--key-name <key-name>]
                               [--property <key=value>]
                               [--file <dest-filename=source-filename>]
                               [--user-data <user-data>]
                               [--availability-zone <zone-name>]
                               [--block-device-mapping <dev-name=mapping>]
                               [--nic <net-id=net-uuid,v4-fixed-ip=ip-addr,v6-fixed-ip=ip-addr,port-id=port-uuid>]
                               [--hint <key=value>]
                               [--config-drive <config-drive-volume>|True]
                               [--min <count>] [--max <count>] [--wait]
                               <server-name>
openstack server create: error: too few arguments
```

The direct inputs that affect scheduling are `[--availability-zone <zone-name>]` and `[--hint <key=value>]`.

- Request parameters generated by the Nova API (nova/objects/request_spec.py):

```python
...
@base.NovaObjectRegistry.register
class RequestSpec(base.NovaObject):
    # Version 1.0: Initial version
    # Version 1.1: ImageMeta version 1.6
    # Version 1.2: SchedulerRetries version 1.1
    # Version 1.3: InstanceGroup version 1.10
    # Version 1.4: ImageMeta version 1.7
    # Version 1.5: Added get_by_instance_uuid(), create(), save()
    # Version 1.6: Added requested_destination
    # Version 1.7: Added destroy()
    # Version 1.8: Added security_groups
    VERSION = '1.8'

    fields = {
        'id': fields.IntegerField(),
        'image': fields.ObjectField('ImageMeta', nullable=True),
        'numa_topology': fields.ObjectField('InstanceNUMATopology',
                                            nullable=True),
        'pci_requests': fields.ObjectField('InstancePCIRequests',
                                           nullable=True),
        'project_id': fields.StringField(nullable=True),
        'availability_zone': fields.StringField(nullable=True),
        'flavor': fields.ObjectField('Flavor', nullable=False),
        'num_instances': fields.IntegerField(default=1),
        'ignore_hosts': fields.ListOfStringsField(nullable=True),
        'force_hosts': fields.ListOfStringsField(nullable=True),
        'force_nodes': fields.ListOfStringsField(nullable=True),
        'requested_destination': fields.ObjectField('Destination',
                                                    nullable=True,
                                                    default=None),
        'retry': fields.ObjectField('SchedulerRetries', nullable=True),
        'limits': fields.ObjectField('SchedulerLimits', nullable=True),
        'instance_group': fields.ObjectField('InstanceGroup', nullable=True),
        # NOTE(sbauza): Since hints are depending on running filters, we prefer
        # to leave the API correctly validating the hints per the filters and
        # just provide to the RequestSpec object a free-form dictionary
        'scheduler_hints': fields.DictOfListOfStringsField(nullable=True),
        'instance_uuid': fields.UUIDField(),
        'security_groups': fields.ObjectField('SecurityGroupList'),
    }
...
```
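
`scheduler_hints` is declared as a `DictOfListOfStringsField`, so each hint value is carried as a list of strings even when a single `--hint key=value` was given. The hypothetical helper below turns repeated `key=value` strings into that shape. How the client and API actually merge repeated hints is not shown in this article, so treat the accumulation behavior as an assumption; the keys `same_host` and `group` are used only as examples.

```python
from typing import Dict, List


def hints_from_pairs(pairs: List[str]) -> Dict[str, List[str]]:
    """Turn repeated "key=value" strings into a dict of string lists.

    Hypothetical helper mirroring the DictOfListOfStringsField shape of
    RequestSpec.scheduler_hints; it is not Nova or client code.
    """
    hints: Dict[str, List[str]] = {}
    for pair in pairs:
        key, sep, value = pair.partition("=")
        if not sep or not key:
            raise ValueError(f"hint must look like key=value, got {pair!r}")
        # Repeated keys accumulate instead of overwriting each other.
        hints.setdefault(key, []).append(value)
    return hints


# e.g. --hint same_host=uuid-1 --hint same_host=uuid-2 --hint group=uuid-g
hints = hints_from_pairs(["same_host=uuid-1", "same_host=uuid-2",
                          "group=uuid-g"])
print(hints)  # {'same_host': ['uuid-1', 'uuid-2'], 'group': ['uuid-g']}
```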

#### Host State

The main information held in the host state (nova/scheduler/host_manager.py):

```python
class HostState(object):
    """Mutable and immutable information tracked for a host.
    This is an attempt to remove the ad-hoc data structures
    previously used and lock down access.
    """

    def __init__(self, host, node):
        self.host = host
        self.nodename = node
        self._lock_name = (host, node)

        # Mutable available resources.
        # These will change as resources are virtually "consumed".
        self.total_usable_ram_mb = 0
        self.total_usable_disk_gb = 0
        self.disk_mb_used = 0
        self.free_ram_mb = 0
        self.free_disk_mb = 0
        self.vcpus_total = 0
        self.vcpus_used = 0
        self.pci_stats = None
        self.numa_topology = None

        # Additional host information from the compute node stats:
        self.num_instances = 0
        self.num_io_ops = 0

        # Other information
        self.host_ip = None
        self.hypervisor_type = None
        self.hypervisor_version = None
        self.hypervisor_hostname = None
        self.cpu_info = None
        self.supported_instances = None

        # Resource oversubscription values for the compute host:
        self.limits = {}

        # Generic metrics from compute nodes
        self.metrics = None

        # List of aggregates the host belongs to
        self.aggregates = []

        # Instances on this host
        self.instances = {}

        # Allocation ratios for this host
        self.ram_allocation_ratio = None
        self.cpu_allocation_ratio = None
        self.disk_allocation_ratio = None

        self.updated = None
```
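
The comment in `HostState` notes that the mutable resource fields change as resources are virtually "consumed". The sketch below shows the idea with a trimmed-down, hypothetical `SimpleHostState`: once a host is chosen for an instance, its free RAM, free disk and vCPU usage are reduced, so a later instance in the same request sees the remaining capacity. Nova's own update logic (not shown here) tracks more fields than this.

```python
from dataclasses import dataclass


@dataclass
class SimpleHostState:
    """Hypothetical, trimmed-down stand-in for Nova's HostState."""
    host: str
    free_ram_mb: int
    free_disk_mb: int
    vcpus_total: int
    vcpus_used: int = 0
    num_instances: int = 0

    def consume(self, ram_mb: int, disk_gb: int, vcpus: int) -> None:
        """Virtually consume resources for one instance placed here."""
        self.free_ram_mb -= ram_mb
        self.free_disk_mb -= disk_gb * 1024  # flavors specify disk in GB
        self.vcpus_used += vcpus
        self.num_instances += 1


state = SimpleHostState("osdev-01", free_ram_mb=4096, free_disk_mb=20480,
                        vcpus_total=8)
state.consume(ram_mb=64, disk_gb=1, vcpus=1)  # one machine.fast instance
print(state.free_ram_mb, state.free_disk_mb, state.vcpus_used)  # 4032 19456 1
```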

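#### Flavor Metadata and Filters

Flavor metadata lives mainly in the `extra_specs` field. Most keys have no predefined meaning and can be used freely.

AggregateInstanceExtraSpecsFilter evaluates metadata keys that are either unscoped or in the `aggregate_instance_extra_specs` scope; keys in any other scope are skipped (nova/scheduler/filters/aggregate_instance_extra_specs.py):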

```python
from oslo_log import log as logging

from nova.scheduler import filters
from nova.scheduler.filters import extra_specs_ops
from nova.scheduler.filters import utils


LOG = logging.getLogger(__name__)

_SCOPE = 'aggregate_instance_extra_specs'


class AggregateInstanceExtraSpecsFilter(filters.BaseHostFilter):
    """AggregateInstanceExtraSpecsFilter works with InstanceType records."""

    # Aggregate data and instance type does not change within a request
    run_filter_once_per_request = True

    RUN_ON_REBUILD = False

    def host_passes(self, host_state, spec_obj):
        """Return a list of hosts that can create instance_type

        Check that the extra specs associated with the instance type match
        the metadata provided by aggregates.  If not present return False.
        """
        instance_type = spec_obj.flavor
        # If 'extra_specs' is not present or extra_specs are empty then we
        # need not proceed further
        if (not instance_type.obj_attr_is_set('extra_specs')
                or not instance_type.extra_specs):
            return True

        metadata = utils.aggregate_metadata_get_by_host(host_state)

        for key, req in instance_type.extra_specs.items():
            # Either not scope format, or aggregate_instance_extra_specs scope
            scope = key.split(':', 1)
            if len(scope) > 1:
                if scope[0] != _SCOPE:
                    continue
                else:
                    del scope[0]
            key = scope[0]
            aggregate_vals = metadata.get(key, None)
            if not aggregate_vals:
                LOG.debug("%(host_state)s fails instance_type extra_specs "
                          "requirements. Extra_spec %(key)s is not in aggregate.",
                          {'host_state': host_state, 'key': key})
                return False
            for aggregate_val in aggregate_vals:
                if extra_specs_ops.match(aggregate_val, req):
                    break
            else:
                LOG.debug("%(host_state)s fails instance_type extra_specs "
                          "requirements. '%(aggregate_vals)s' do not "
                          "match '%(req)s'",
                          {'host_state': host_state, 'req': req,
                           'aggregate_vals': aggregate_vals})
                return False
        return True
```
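
The scope handling in `host_passes` can be tried without a Nova installation. The sketch below is a simplified, hypothetical stand-in that works on plain dictionaries; as an assumption for brevity, it compares values with string equality instead of calling `extra_specs_ops.match()`, so operators such as `>=` are not supported here.

```python
from typing import Dict, Set

SCOPE = "aggregate_instance_extra_specs"


def host_passes(extra_specs: Dict[str, str],
                aggregate_metadata: Dict[str, Set[str]]) -> bool:
    """Simplified AggregateInstanceExtraSpecsFilter.host_passes.

    Assumption: plain string equality replaces extra_specs_ops.match().
    """
    for key, req in extra_specs.items():
        scope = key.split(":", 1)
        if len(scope) > 1:
            if scope[0] != SCOPE:
                continue          # belongs to another filter's scope
            key = scope[1]        # strip our own scope prefix
        values = aggregate_metadata.get(key)
        if not values:
            return False          # key missing from the host's aggregates
        if req not in values:
            return False          # no aggregate value matches
    return True


io_fast_host = {"io": {"fast"}}
print(host_passes({"io": "fast"}, io_fast_host))            # True
print(host_passes({SCOPE + ":io": "fast"}, io_fast_host))   # True
print(host_passes({"io": "slow"}, io_fast_host))            # False
```

A key such as `capabilities:hypervisor_type` is skipped entirely by this filter, because its scope is not `aggregate_instance_extra_specs`.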

- Operators used for metadata matching (nova/scheduler/filters/extra_specs_ops.py):

```python
import operator

# 1. The following operations are supported:
#   =, s==, s!=, s>=, s>, s<=, s<, <in>, <all-in>, <or>, ==, !=, >=, <=
# 2. Note that <or> is handled in a different way below.
# 3. If the first word in the extra_specs is not one of the operators,
#   it is ignored.
op_methods = {'=': lambda x, y: float(x) >= float(y),
              '<in>': lambda x, y: y in x,
              '<all-in>': lambda x, y: all(val in x for val in y),
              '==': lambda x, y: float(x) == float(y),
              '!=': lambda x, y: float(x) != float(y),
              '>=': lambda x, y: float(x) >= float(y),
              '<=': lambda x, y: float(x) <= float(y),
              's==': operator.eq,
              's!=': operator.ne,
              's<': operator.lt,
              's<=': operator.le,
              's>': operator.gt,
              's>=': operator.ge}


def match(value, req):
    words = req.split()

    op = method = None
    if words:
        op = words.pop(0)
        method = op_methods.get(op)

    if op != '<or>' and not method:
        return value == req

    if value is None:
        return False

    if op == '<or>':  # Ex: <or> v1 <or> v2 <or> v3
        while True:
            if words.pop(0) == value:
                return True
            if not words:
                break
            words.pop(0)  # remove a keyword <or>
            if not words:
                break
        return False

    if words:
        if op == '<all-in>':  # requires a list not a string
            return method(value, words)
        return method(value, words[0])
    return False
```
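
To see how requirement strings are evaluated, here is a condensed, standalone copy of `match()` exercised with the example strings listed for ComputeCapabilitiesFilter. One difference is intentional: the `<or>` branch is written as a slice over the remaining words, so a bare `<or>` returns False here instead of raising IndexError as the original loop would. Note that `=` means "greater than or equal to" numerically, not equality.

```python
import operator

# Same operator table as extra_specs_ops.op_methods.
op_methods = {
    '=': lambda x, y: float(x) >= float(y),
    '<in>': lambda x, y: y in x,
    '<all-in>': lambda x, y: all(val in x for val in y),
    '==': lambda x, y: float(x) == float(y),
    '!=': lambda x, y: float(x) != float(y),
    '>=': lambda x, y: float(x) >= float(y),
    '<=': lambda x, y: float(x) <= float(y),
    's==': operator.eq, 's!=': operator.ne,
    's<': operator.lt, 's<=': operator.le,
    's>': operator.gt, 's>=': operator.ge,
}


def match(value, req):
    """Condensed copy of extra_specs_ops.match for experimentation."""
    words = req.split()
    op = words.pop(0) if words else None
    method = op_methods.get(op)
    if op != '<or>' and not method:
        return value == req          # no operator: plain string equality
    if value is None:
        return False
    if op == '<or>':
        # "<or> a <or> b": every even-indexed remaining word is a candidate
        return value in words[0::2]
    if not words:
        return False
    if op == '<all-in>':
        return method(value, words)  # needs the whole word list
    return method(value, words[0])


print(match("4", "= 2"))                  # True: "=" means ">=" for numbers
print(match("gpu", "<or> fpu <or> gpu"))  # True
```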

#### Image Metadata and Filters

- Basic image attributes (nova/objects/image_meta.py):

```python
@base.NovaObjectRegistry.register
class ImageMeta(base.NovaObject):
    fields = {
        'id': fields.UUIDField(),
        'name': fields.StringField(),
        'status': fields.StringField(),
        'visibility': fields.StringField(),
        'protected': fields.FlexibleBooleanField(),
        'checksum': fields.StringField(),
        'owner': fields.StringField(),
        'size': fields.IntegerField(),
        'virtual_size': fields.IntegerField(),
        'container_format': fields.StringField(),
        'disk_format': fields.StringField(),
        'created_at': fields.DateTimeField(nullable=True),
        'updated_at': fields.DateTimeField(nullable=True),
        'tags': fields.ListOfStringsField(),
        'direct_url': fields.StringField(),
        'min_ram': fields.IntegerField(),
        'min_disk': fields.IntegerField(),
        'properties': fields.ObjectField('ImageMetaProps'),
    }
```

- Values available in image metadata properties (nova/objects/image_meta.py):

```python
@base.NovaObjectRegistry.register
class ImageMetaProps(base.NovaObject):
    # Version 1.0: Initial version
    # Version 1.1: added os_require_quiesce field
    # Version 1.2: added img_hv_type and img_hv_requested_version fields
    # Version 1.3: HVSpec version 1.1
    # Version 1.4: added hw_vif_multiqueue_enabled field
    # Version 1.5: added os_admin_user field
    # Version 1.6: Added 'lxc' and 'uml' enum types to DiskBusField
    # Version 1.7: added img_config_drive field
    # Version 1.8: Added 'lxd' to hypervisor types
    # Version 1.9: added hw_cpu_thread_policy field
    # Version 1.10: added hw_cpu_realtime_mask field
    # Version 1.11: Added hw_firmware_type field
    # Version 1.12: Added properties for image signature verification
    # Version 1.13: added os_secure_boot field
    # Version 1.14: Added 'hw_pointer_model' field
    # Version 1.15: Added hw_rescue_bus and hw_rescue_device.
    # Version 1.16: WatchdogActionField supports 'disabled' enum.
    VERSION = '1.16'

    def obj_make_compatible(self, primitive, target_version):
        super(ImageMetaProps, self).obj_make_compatible(primitive,
                                                         target_version)
        target_version = versionutils.convert_version_to_tuple(target_version)
        if target_version < (1, 16) and 'hw_watchdog_action' in primitive:
            # Check to see if hw_watchdog_action was set to 'disabled' and if
            # so, remove it since not specifying it is the same behavior.
            if primitive['hw_watchdog_action'] == \
                    fields.WatchdogAction.DISABLED:
                primitive.pop('hw_watchdog_action')
        if target_version < (1, 15):
            primitive.pop('hw_rescue_bus', None)
            primitive.pop('hw_rescue_device', None)
        if target_version < (1, 14):
            primitive.pop('hw_pointer_model', None)
        if target_version < (1, 13):
            primitive.pop('os_secure_boot', None)
        if target_version < (1, 11):
            primitive.pop('hw_firmware_type', None)
        if target_version < (1, 10):
            primitive.pop('hw_cpu_realtime_mask', None)
        if target_version < (1, 9):
            primitive.pop('hw_cpu_thread_policy', None)
        if target_version < (1, 7):
            primitive.pop('img_config_drive', None)
        if target_version < (1, 5):
            primitive.pop('os_admin_user', None)
        if target_version < (1, 4):
            primitive.pop('hw_vif_multiqueue_enabled', None)
        if target_version < (1, 2):
            primitive.pop('img_hv_type', None)
            primitive.pop('img_hv_requested_version', None)
        if target_version < (1, 1):
            primitive.pop('os_require_quiesce', None)

        if target_version < (1, 6):
            bus = primitive.get('hw_disk_bus', None)
            if bus in ('lxc', 'uml'):
                raise exception.ObjectActionError(
                    action='obj_make_compatible',
                    reason='hw_disk_bus=%s not supported in version %s' % (
                        bus, target_version))

    # Maximum number of NUMA nodes permitted for the guest topology
    NUMA_NODES_MAX = 128

    # 'hw_' - settings affecting the guest virtual machine hardware
    # 'img_' - settings affecting the use of images by the compute node
    # 'os_' - settings affecting the guest operating system setup

    fields = {
        # name of guest hardware architecture eg i686, x86_64, ppc64
        'hw_architecture': fields.ArchitectureField(),

        # used to decide to expand root disk partition and fs to full size of
        # root disk
        'hw_auto_disk_config': fields.StringField(),

        # whether to display BIOS boot device menu
        'hw_boot_menu': fields.FlexibleBooleanField(),

        # name of the CDROM bus to use eg virtio, scsi, ide
        'hw_cdrom_bus': fields.DiskBusField(),

        # preferred number of CPU cores per socket
        'hw_cpu_cores': fields.IntegerField(),

        # preferred number of CPU sockets
        'hw_cpu_sockets': fields.IntegerField(),

        # maximum number of CPU cores per socket
        'hw_cpu_max_cores': fields.IntegerField(),

        # maximum number of CPU sockets
        'hw_cpu_max_sockets': fields.IntegerField(),

        # maximum number of CPU threads per core
        'hw_cpu_max_threads': fields.IntegerField(),

        # CPU allocation policy
        'hw_cpu_policy': fields.CPUAllocationPolicyField(),

        # CPU thread allocation policy
        'hw_cpu_thread_policy': fields.CPUThreadAllocationPolicyField(),

        # CPU mask indicates which vCPUs will have realtime enable,
        # example ^0-1 means that all vCPUs except 0 and 1 will have a
        # realtime policy.
        'hw_cpu_realtime_mask': fields.StringField(),

        # preferred number of CPU threads per core
        'hw_cpu_threads': fields.IntegerField(),

        # guest ABI version for guest xentools either 1 or 2 (or 3 - depends on
        # Citrix PV tools version installed in image)
        'hw_device_id': fields.IntegerField(),

        # name of the hard disk bus to use eg virtio, scsi, ide
        'hw_disk_bus': fields.DiskBusField(),

        # allocation mode eg 'preallocated'
        'hw_disk_type': fields.StringField(),

        # name of the floppy disk bus to use eg fd, scsi, ide
        'hw_floppy_bus': fields.DiskBusField(),

        # This indicates the guest needs UEFI firmware
        'hw_firmware_type': fields.FirmwareTypeField(),

        # boolean - used to trigger code to inject networking when booting a CD
        # image with a network boot image
        'hw_ipxe_boot': fields.FlexibleBooleanField(),

        # There are sooooooooooo many possible machine types in
        # QEMU - several new ones with each new release - that it
        # is not practical to enumerate them all. So we use a free
        # form string
        'hw_machine_type': fields.StringField(),

        # One of the magic strings 'small', 'any', 'large'
        # or an explicit page size in KB (eg 4, 2048, ...)
        'hw_mem_page_size': fields.StringField(),

        # Number of guest NUMA nodes
        'hw_numa_nodes': fields.IntegerField(),

        # Each list entry corresponds to a guest NUMA node and the
        # set members indicate CPUs for that node
        'hw_numa_cpus': fields.ListOfSetsOfIntegersField(),

        # Each list entry corresponds to a guest NUMA node and the
        # list value indicates the memory size of that node.
        'hw_numa_mem': fields.ListOfIntegersField(),

        # Generic property to specify the pointer model type.
        'hw_pointer_model': fields.PointerModelField(),

        # boolean 'yes' or 'no' to enable QEMU guest agent
        'hw_qemu_guest_agent': fields.FlexibleBooleanField(),

        # name of the rescue bus to use with the associated rescue device.
        'hw_rescue_bus': fields.DiskBusField(),

        # name of rescue device to use.
        'hw_rescue_device': fields.BlockDeviceTypeField(),

        # name of the RNG device type eg virtio
        'hw_rng_model': fields.RNGModelField(),

        # number of serial ports to create
        'hw_serial_port_count': fields.IntegerField(),

        # name of the SCSI bus controller eg 'virtio-scsi', 'lsilogic', etc
        'hw_scsi_model': fields.SCSIModelField(),

        # name of the video adapter model to use, eg cirrus, vga, xen, qxl
        'hw_video_model': fields.VideoModelField(),

        # MB of video RAM to provide eg 64
        'hw_video_ram': fields.IntegerField(),

        # name of a NIC device model eg virtio, e1000, rtl8139
        'hw_vif_model': fields.VIFModelField(),

        # "xen" vs "hvm"
        'hw_vm_mode': fields.VMModeField(),

        # action to take when watchdog device fires eg reset, poweroff, pause,
        # none
        'hw_watchdog_action': fields.WatchdogActionField(),

        # boolean - If true, this will enable the virtio-multiqueue feature
        'hw_vif_multiqueue_enabled': fields.FlexibleBooleanField(),

        # if true download using bittorrent
        'img_bittorrent': fields.FlexibleBooleanField(),

        # Which data format the 'img_block_device_mapping' field is
        # using to represent the block device mapping
        'img_bdm_v2': fields.FlexibleBooleanField(),

        # Block device mapping - the may can be in one or two completely
        # different formats. The 'img_bdm_v2' field determines whether
        # it is in legacy format, or the new current format. Ideally
        # we would have a formal data type for this field instead of a
        # dict, but with 2 different formats to represent this is hard.
        # See nova/block_device.py from_legacy_mapping() for the complex
        # conversion code. So for now leave it as a dict and continue
        # to use existing code that is able to convert dict into the
        # desired internal BDM formats
        'img_block_device_mapping': fields.ListOfDictOfNullableStringsField(),

        # boolean - if True, and image cache set to "some" decides if image
        # should be cached on host when server is booted on that host
        'img_cache_in_nova': fields.FlexibleBooleanField(),

        # Compression level for images. (1-9)
        'img_compression_level': fields.IntegerField(),

        # hypervisor supported version, eg. '>=2.6'
        'img_hv_requested_version': fields.VersionPredicateField(),

        # type of the hypervisor, eg kvm, ironic, xen
        'img_hv_type': fields.HVTypeField(),

        # Whether the image needs/expected config drive
        'img_config_drive': fields.ConfigDrivePolicyField(),

        # boolean flag to set space-saving or performance behavior on the
        # Datastore
        'img_linked_clone': fields.FlexibleBooleanField(),

        # Image mappings - related to Block device mapping data - mapping
        # of virtual image names to device names. This could be represented
        # as a formal data type, but is left as dict for same reason as
        # img_block_device_mapping field. It would arguably make sense for
        # the two to be combined into a single field and data type in the
        # future.
        'img_mappings': fields.ListOfDictOfNullableStringsField(),

        # image project id (set on upload)
        'img_owner_id': fields.StringField(),

        # root device name, used in snapshotting eg /dev/<blah>
        'img_root_device_name': fields.StringField(),

        # boolean - if false don't talk to nova agent
        'img_use_agent': fields.FlexibleBooleanField(),

        # integer value 1
        'img_version': fields.IntegerField(),

        # base64 of encoding of image signature
        'img_signature': fields.StringField(),

        # string indicating hash method used to compute image signature
        'img_signature_hash_method': fields.ImageSignatureHashTypeField(),

        # string indicating Castellan uuid of certificate
        # used to compute the image's signature
        'img_signature_certificate_uuid': fields.UUIDField(),

        # string indicating type of key used to compute image signature
        'img_signature_key_type': fields.ImageSignatureKeyTypeField(),

        # string of username with admin privileges
        'os_admin_user': fields.StringField(),

        # string of boot time command line arguments for the guest kernel
        'os_command_line': fields.StringField(),

        # the name of the specific guest operating system distro. This
        # is not done as an Enum since the list of operating systems is
        # growing incredibly fast, and valid values can be arbitrarily
        # user defined. Nova has no real need for strict validation so
        # leave it freeform
        'os_distro': fields.StringField(),

        # boolean - if true, then guest must support disk quiesce
        # or snapshot operation will be denied
        'os_require_quiesce': fields.FlexibleBooleanField(),

        # Secure Boot feature will be enabled by setting the "os_secure_boot"
        # image property to "required". Other options can be: "disabled" or
        # "optional".
        # "os:secure_boot" flavor extra spec value overrides the image property
        # value.
        'os_secure_boot': fields.SecureBootField(),

        # boolean - if using agent don't inject files, assume someone else is
        # doing that (cloud-init)
        'os_skip_agent_inject_files_at_boot': fields.FlexibleBooleanField(),

        # boolean - if using agent don't try inject ssh key, assume someone
        # else is doing that (cloud-init)
        'os_skip_agent_inject_ssh': fields.FlexibleBooleanField(),

        # The guest operating system family such as 'linux', 'windows' - this
        # is a fairly generic type. For a detailed type consider os_distro
        # instead
        'os_type': fields.OSTypeField(),
    }

    # The keys are the legacy property names and
    # the values are the current preferred names
    _legacy_property_map = {
        'architecture': 'hw_architecture',
        'owner_id': 'img_owner_id',
        'vmware_disktype': 'hw_disk_type',
        'vmware_image_version': 'img_version',
        'vmware_ostype': 'os_distro',
        'auto_disk_config': 'hw_auto_disk_config',
        'ipxe_boot': 'hw_ipxe_boot',
        'xenapi_device_id': 'hw_device_id',
        'xenapi_image_compression_level': 'img_compression_level',
        'vmware_linked_clone': 'img_linked_clone',
        'xenapi_use_agent': 'img_use_agent',
        'xenapi_skip_agent_inject_ssh': 'os_skip_agent_inject_ssh',
        'xenapi_skip_agent_inject_files_at_boot':
            'os_skip_agent_inject_files_at_boot',
        'cache_in_nova': 'img_cache_in_nova',
        'vm_mode': 'hw_vm_mode',
        'bittorrent': 'img_bittorrent',
        'mappings': 'img_mappings',
        'block_device_mapping': 'img_block_device_mapping',
        'bdm_v2': 'img_bdm_v2',
        'root_device_name': 'img_root_device_name',
        'hypervisor_version_requires': 'img_hv_requested_version',
        'hypervisor_type': 'img_hv_type',
    }
```
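
ImagePropertiesFilter reads `hw_architecture`, `img_hv_type` and `hw_vm_mode`, while older images may still carry the legacy names listed in `_legacy_property_map`. The hypothetical helper below renames legacy keys using a subset of that map. When both the legacy and the current name are present, this sketch keeps the value stored under the current name; that is a choice made for the sketch, not documented Nova behavior.

```python
from typing import Any, Dict

# A subset of ImageMetaProps._legacy_property_map.
LEGACY_PROPERTY_MAP = {
    'architecture': 'hw_architecture',
    'hypervisor_type': 'img_hv_type',
    'vm_mode': 'hw_vm_mode',
    'hypervisor_version_requires': 'img_hv_requested_version',
}


def upgrade_legacy_props(props: Dict[str, Any]) -> Dict[str, Any]:
    """Rename legacy image property names to their current names."""
    # Keep every property that is not a legacy name as-is.
    result = {k: v for k, v in props.items() if k not in LEGACY_PROPERTY_MAP}
    # Fill in current names from legacy ones, never overwriting.
    for old, new in LEGACY_PROPERTY_MAP.items():
        if old in props and new not in result:
            result[new] = props[old]
    return result


print(upgrade_legacy_props({"architecture": "x86_64", "os_distro": "cirros"}))
# {'os_distro': 'cirros', 'hw_architecture': 'x86_64'}
```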

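- ImagePropertiesFilter mainly compares image properties such as architecture, hypervisor type and VM mode with what the host supports (nova/scheduler/filters/image_props_filter.py):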

```python
class ImagePropertiesFilter(filters.BaseHostFilter):
    """Filter compute nodes that satisfy instance image properties.

    The ImagePropertiesFilter filters compute nodes that satisfy
    any architecture, hypervisor type, or virtual machine mode properties
    specified on the instance's image properties.  Image properties are
    contained in the image dictionary in the request_spec.
    """

    RUN_ON_REBUILD = True

    # Image Properties and Compute Capabilities do not change within
    # a request
    run_filter_once_per_request = True

    def _instance_supported(self, host_state, image_props,
                            hypervisor_version):
        img_arch = image_props.get('hw_architecture')
        img_h_type = image_props.get('img_hv_type')
        img_vm_mode = image_props.get('hw_vm_mode')
        checked_img_props = (
            fields.Architecture.canonicalize(img_arch),
            fields.HVType.canonicalize(img_h_type),
            fields.VMMode.canonicalize(img_vm_mode)
        )

        # Supported if no compute-related instance properties are specified
        if not any(checked_img_props):
            return True

        supp_instances = host_state.supported_instances
        # Not supported if an instance property is requested but nothing
        # advertised by the host.
        if not supp_instances:
            LOG.debug("Instance contains properties %(image_props)s, "
                      "but no corresponding supported_instances are "
                      "advertised by the compute node",
                      {'image_props': image_props})
            return False

        def _compare_props(props, other_props):
            for i in props:
                if i and i not in other_props:
                    return False
            return True

        def _compare_product_version(hyper_version, image_props):
            version_required = image_props.get('img_hv_requested_version')
            if not(hypervisor_version and version_required):
                return True
            img_prop_predicate = versionpredicate.VersionPredicate(
                'image_prop (%s)' % version_required)
            hyper_ver_str = versionutils.convert_version_to_str(hyper_version)
            return img_prop_predicate.satisfied_by(hyper_ver_str)

        for supp_inst in supp_instances:
            if _compare_props(checked_img_props, supp_inst):
                if _compare_product_version(hypervisor_version, image_props):
                    return True

        LOG.debug("Instance contains properties %(image_props)s "
                  "that are not provided by the compute node "
                  "supported_instances %(supp_instances)s or "
                  "hypervisor version %(hypervisor_version)s do not match",
                  {'image_props': image_props,
                   'supp_instances': supp_instances,
                   'hypervisor_version': hypervisor_version})
        return False

    def host_passes(self, host_state, spec_obj):
        """Check if host passes specified image properties.

        Returns True for compute nodes that satisfy image properties
        contained in the request_spec.
        """
        image_props = spec_obj.image.properties if spec_obj.image else {}

        if not self._instance_supported(host_state, image_props,
                                        host_state.hypervisor_version):
            LOG.debug("%(host_state)s does not support requested "
                      "instance_properties", {'host_state': host_state})
            return False
        return True
```
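The core of `_instance_supported` reduces to a tuple-membership test. The sketch below restates it in plain Python under stated simplifications: `image_supported` is a hypothetical name, values are assumed to be already canonical (lower-case strings such as `x86_64`, `qemu`, `hvm`), and the `img_hv_requested_version` check is left out. Each entry of `supported_instances` is treated as an `(arch, hv_type, vm_mode)` tuple, matching how the filter iterates over it.

```python
def image_supported(image_props, supported_instances):
    """Simplified restatement of ImagePropertiesFilter._instance_supported.

    image_props: dict of current-name image properties.
    supported_instances: iterable of (arch, hv_type, vm_mode) tuples that
        the compute host advertises.
    """
    wanted = (
        image_props.get('hw_architecture'),
        image_props.get('img_hv_type'),
        image_props.get('hw_vm_mode'),
    )
    # No compute-related property requested: every host passes.
    if not any(wanted):
        return True
    # Something requested but the host advertises nothing: reject.
    if not supported_instances:
        return False
    # Pass if one advertised tuple contains every requested (non-empty) value.
    return any(
        all(not w or w in supp for w in wanted)
        for supp in supported_instances
    )


host = [('x86_64', 'qemu', 'hvm'), ('i686', 'qemu', 'hvm')]
print(image_supported({'hw_architecture': 'x86_64'}, host))   # True
print(image_supported({'hw_architecture': 'aarch64'}, host))  # False
```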

#### CoreFilter

Source of the CoreFilter and AggregateCoreFilter filters (nova/scheduler/filters/core_filter.py):

```python
class BaseCoreFilter(filters.BaseHostFilter):

    RUN_ON_REBUILD = False

    def _get_cpu_allocation_ratio(self, host_state, spec_obj):
        raise NotImplementedError

    def host_passes(self, host_state, spec_obj):
        """Return True if host has sufficient CPU cores.

        :param host_state: nova.scheduler.host_manager.HostState
        :param spec_obj: filter options
        :return: boolean
        """
        if not host_state.vcpus_total:
            # Fail safe
            LOG.warning(_LW("VCPUs not set; assuming CPU collection broken"))
            return True

        instance_vcpus = spec_obj.vcpus
        cpu_allocation_ratio = self._get_cpu_allocation_ratio(host_state,
                                                              spec_obj)
        vcpus_total = host_state.vcpus_total * cpu_allocation_ratio

        # Only provide a VCPU limit to compute if the virt driver is reporting
        # an accurate count of installed VCPUs. (XenServer driver does not)
        if vcpus_total > 0:
            host_state.limits['vcpu'] = vcpus_total

            # Do not allow an instance to overcommit against itself, only
            # against other instances.
            if instance_vcpus > host_state.vcpus_total:
                LOG.debug("%(host_state)s does not have %(instance_vcpus)d "
                          "total cpus before overcommit, it only has %(cpus)d",
                          {'host_state': host_state,
                           'instance_vcpus': instance_vcpus,
                           'cpus': host_state.vcpus_total})
                return False

        free_vcpus = vcpus_total - host_state.vcpus_used
        if free_vcpus < instance_vcpus:
            LOG.debug("%(host_state)s does not have %(instance_vcpus)d "
                      "usable vcpus, it only has %(free_vcpus)d usable "
                      "vcpus",
                      {'host_state': host_state,
                       'instance_vcpus': instance_vcpus,
                       'free_vcpus': free_vcpus})
            return False

        return True


class CoreFilter(BaseCoreFilter):
    """CoreFilter filters based on CPU core utilization."""

    def _get_cpu_allocation_ratio(self, host_state, spec_obj):
        return host_state.cpu_allocation_ratio


class AggregateCoreFilter(BaseCoreFilter):
    """AggregateCoreFilter with per-aggregate CPU subscription flag.

    Fall back to global cpu_allocation_ratio if no per-aggregate setting found.
    """

    def _get_cpu_allocation_ratio(self, host_state, spec_obj):
        aggregate_vals = utils.aggregate_values_from_key(
            host_state,
            'cpu_allocation_ratio')
        try:
            ratio = utils.validate_num_values(
                aggregate_vals, host_state.cpu_allocation_ratio, cast_to=float)
        except ValueError as e:
            LOG.warning(_LW("Could not decode cpu_allocation_ratio: '%s'"), e)
            ratio = host_state.cpu_allocation_ratio

        return ratio
```

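As a worked example of the arithmetic in `BaseCoreFilter.host_passes`: a host with 8 physical cores and `cpu_allocation_ratio = 16.0` has a limit of 8 × 16 = 128 vCPUs, so with 120 vCPUs already used it still accepts an 8-vCPU instance but rejects a 9-vCPU one; a 10-vCPU instance is rejected even on an idle host, because it would overcommit against itself. The helper below is a hypothetical, logging-free restatement of that logic, not part of Nova.

```python
def core_filter_passes(vcpus_total, vcpus_used, requested_vcpus,
                       cpu_allocation_ratio):
    """Logging-free restatement of BaseCoreFilter.host_passes."""
    if not vcpus_total:
        return True  # fail-safe: CPU inventory missing, let the host pass
    limit = vcpus_total * cpu_allocation_ratio  # overcommitted vCPU capacity
    if limit > 0 and requested_vcpus > vcpus_total:
        return False  # an instance may not overcommit against itself
    free_vcpus = limit - vcpus_used
    return free_vcpus >= requested_vcpus


print(core_filter_passes(8, 120, 8, 16.0))   # True: 128 - 120 = 8 free
print(core_filter_passes(8, 120, 9, 16.0))   # False: only 8 free
print(core_filter_passes(8, 0, 10, 16.0))    # False: 10 > 8 physical cores
```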
- Reducing several per-aggregate values to a single number (nova/scheduler/filters/utils.py):

```python
def validate_num_values(vals, default=None, cast_to=int, based_on=min):
    """Returns a correctly casted value based on a set of values.

    This method is useful to work with per-aggregate filters, It takes
    a set of values then return the 'based_on'{min/max} converted to
    'cast_to' of the set or the default value.

    Note: The cast implies a possible ValueError
    """
    num_values = len(vals)
    if num_values == 0:
        return default

    if num_values > 1:
        if based_on == min:
            LOG.info(_LI("%(num_values)d values found, "
                         "of which the minimum value will be used."),
                     {'num_values': num_values})
        else:
            LOG.info(_LI("%(num_values)d values found, "
                         "of which the maximum value will be used."),
                     {'num_values': num_values})
    return based_on([cast_to(val) for val in vals])
```

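When several Host Aggregates that a node belongs to each set `cpu_allocation_ratio`, the smallest value is used (AggregateCoreFilter does not pass `based_on`, so it defaults to `min`).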
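A minimal sketch of how AggregateCoreFilter ends up with a single ratio, combining `validate_num_values` with the `ValueError` fallback in `_get_cpu_allocation_ratio`. `resolve_ratio` is a hypothetical name; its input is assumed to be the set of string values that `aggregate_values_from_key` collects from the host's aggregates.

```python
def resolve_ratio(aggregate_values, host_default, based_on=min, cast_to=float):
    """Pick one allocation ratio from per-aggregate string values.

    Mirrors validate_num_values(vals, default, cast_to, based_on) plus the
    ValueError fallback in AggregateCoreFilter._get_cpu_allocation_ratio.
    """
    if not aggregate_values:
        return host_default  # no aggregate sets the key
    try:
        return based_on(cast_to(v) for v in aggregate_values)
    except ValueError:
        return host_default  # unparsable value: fall back to the host ratio


print(resolve_ratio({'16.0', '4'}, 16.0))  # 4.0: the smaller ratio wins
print(resolve_ratio(set(), 16.0))          # 16.0: no per-aggregate setting
print(resolve_ratio({'abc'}, 16.0))        # 16.0: cast fails, fallback
```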

## Disaster Recovery

### Node Partitioning

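- Region: mainly divides the cluster by physical location. Each Region has its own independent endpoints, and Regions are completely isolated from each other, but multiple Regions share the same Keystone and Dashboard.

- Availability Zone: can be thought of simply as a set of nodes with an independent power supply, for example a separately powered server room or a separately powered rack.

- Host Aggregate: lets administrators partition hardware according to node attributes; it is visible only to administrators.

- Cell: addresses OpenStack's scalability and scale bottlenecks by partitioning components such as the database and AMQP, enabling hierarchical scheduling.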

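**Summary:**

- Region and Availability Zone can be used to specify where instances are deployed, and this capability can be exposed to users. Alternatively, you can manage placement yourself and offer users a range of disaster-recovery options.

- Cell can be used to partition the cluster and improve its horizontal scalability.

- Host Aggregate can be used to group hosts by their attributes. Combined with the AggregateInstanceExtraSpecsFilter filter, hosts can also be grouped under different scheduling policies; together with flavor metadata, a single cluster can then support several scheduling policies at the same time.

- The ServerGroupAntiAffinityFilter and ServerGroupAffinityFilter plugins can be used with the `--hint` parameter to deploy VMs in groups.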

#### Multiple Regions

- With multiple regions, the Region is selected with the `--os-region-name` parameter:

```
$ nova --help
...
  --os-region-name <region-name>
                        Defaults to env[OS_REGION_NAME].
...
```

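#### Aggregates

In the current CLI, availability zones and aggregates are managed with the same family of commands.

- Multiple server rooms are modelled as Availability Zones (AvailabilityZoneFilter) and multiple racks as Host Aggregates (AggregateInstanceExtraSpecsFilter); together with metadata, they are managed with the following commands: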

```
$ nova --help
...
    aggregate-add-host          Add the host to the specified aggregate.
    aggregate-create            Create a new aggregate with the specified details.
    aggregate-delete            Delete the aggregate.
    aggregate-list              Print a list of all aggregates.
    aggregate-remove-host       Remove the specified host from the specified aggregate.
    aggregate-set-metadata      Update the metadata associated with the aggregate.
    aggregate-show              Show details of the specified aggregate.
    aggregate-update            Update the aggregate's name and optionally availability zone.
    availability-zone-list      List all the availability zones.
...
```

### Filter Configuration

Make sure AvailabilityZoneFilter is enabled and add AggregateInstanceExtraSpecsFilter:

```
$ vi /etc/kolla/nova-scheduler/nova.conf
[DEFAULT]
...
scheduler_default_filters = AggregateInstanceExtraSpecsFilter, RetryFilter, AvailabilityZoneFilter, RamFilter, DiskFilter, ComputeFilter, ComputeCapabilitiesFilter, ImagePropertiesFilter, ServerGroupAntiAffinityFilter, ServerGroupAffinityFilter
...
$ docker restart nova_scheduler
```

### Scheduling Tests

#### Availability Zone Configuration

- Assume one Region with 2 server rooms, each containing 2 racks:

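| Host | Server room (AZ) | Rack (aggregate) | Rack attribute |
|------|------------------|------------------|----------------|
| osdev-01 | az01 | az01-ha01 | addr=11 |
| osdev-02 | az01 | az01-ha02 | addr=12 |
| osdev-03 | az02 | az02-ha01 | addr=21 |
| osdev-ceph | az02 | az02-ha02 | addr=22 |
| osdev-gpu | az02 | az02-ha02 | addr=22 |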

```
$ nova availability-zone-list
+-----------------------+----------------------------------------+
| Name                  | Status                                 |
+-----------------------+----------------------------------------+
| internal              | available                              |
| |- osdev-01           |                                        |
| | |- nova-conductor   | enabled :-) 2018-03-15T09:51:30.000000 |
| | |- nova-scheduler   | enabled :-) 2018-03-15T09:51:30.000000 |
| | |- nova-consoleauth | enabled :-) 2018-03-15T09:51:31.000000 |
| nova                  | available                              |
| |- osdev-01           |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T09:51:30.000000 |
| |- osdev-02           |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T09:51:25.000000 |
| |- osdev-03           |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T09:51:31.000000 |
| |- osdev-ceph         |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T09:51:28.000000 |
| |- osdev-gpu          |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T09:51:26.000000 |
+-----------------------+----------------------------------------+
```

- Create 2 Availability Zones and 4 Host Aggregates:

```
$ nova aggregate-create az01-ha01 az01
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts | Metadata                 | UUID                                 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| 3  | az01-ha01 | az01              |       | 'availability_zone=az01' | 3baac65d-2907-412a-98f5-60e582612548 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
$ nova aggregate-create az01-ha02 az01
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts | Metadata                 | UUID                                 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| 4  | az01-ha02 | az01              |       | 'availability_zone=az01' | 5ea0f221-024d-43f5-b1f1-6e0cc364ad39 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
$ nova aggregate-create az02-ha01 az02
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts | Metadata                 | UUID                                 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| 5  | az02-ha01 | az02              |       | 'availability_zone=az02' | 00cdcefa-bcd0-490c-bdee-9cc970268a03 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
$ nova aggregate-create az02-ha02 az02
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts | Metadata                 | UUID                                 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
| 6  | az02-ha02 | az02              |       | 'availability_zone=az02' | 81de444f-4730-471a-bff5-ff11372c3096 |
+----+-----------+-------------------+-------+--------------------------+--------------------------------------+
```

- Add the 5 nodes to the 4 Host Aggregates:

```
$ nova aggregate-add-host az01-ha01 osdev-01
Host osdev-01 has been successfully added for aggregate 3
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts      | Metadata                 | UUID                                 |
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
| 3  | az01-ha01 | az01              | 'osdev-01' | 'availability_zone=az01' | 3baac65d-2907-412a-98f5-60e582612548 |
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
$ nova aggregate-add-host az01-ha02 osdev-02
Host osdev-02 has been successfully added for aggregate 4
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts      | Metadata                 | UUID                                 |
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
| 4  | az01-ha02 | az01              | 'osdev-02' | 'availability_zone=az01' | 5ea0f221-024d-43f5-b1f1-6e0cc364ad39 |
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
$ nova aggregate-add-host az02-ha01 osdev-03
Host osdev-03 has been successfully added for aggregate 5
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts      | Metadata                 | UUID                                 |
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
| 5  | az02-ha01 | az02              | 'osdev-03' | 'availability_zone=az02' | 00cdcefa-bcd0-490c-bdee-9cc970268a03 |
+----+-----------+-------------------+------------+--------------------------+--------------------------------------+
$ nova aggregate-add-host az02-ha02 osdev-ceph
Host osdev-ceph has been successfully added for aggregate 6
+----+-----------+-------------------+--------------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts        | Metadata                 | UUID                                 |
+----+-----------+-------------------+--------------+--------------------------+--------------------------------------+
| 6  | az02-ha02 | az02              | 'osdev-ceph' | 'availability_zone=az02' | 81de444f-4730-471a-bff5-ff11372c3096 |
+----+-----------+-------------------+--------------+--------------------------+--------------------------------------+
$ nova aggregate-add-host az02-ha02 osdev-gpu
Host osdev-gpu has been successfully added for aggregate 6
+----+-----------+-------------------+---------------------------+--------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts                     | Metadata                 | UUID                                 |
+----+-----------+-------------------+---------------------------+--------------------------+--------------------------------------+
| 6  | az02-ha02 | az02              | 'osdev-ceph', 'osdev-gpu' | 'availability_zone=az02' | 81de444f-4730-471a-bff5-ff11372c3096 |
+----+-----------+-------------------+---------------------------+--------------------------+--------------------------------------+
```

```
$ nova availability-zone-list
+-----------------------+----------------------------------------+
| Name                  | Status                                 |
+-----------------------+----------------------------------------+
| internal              | available                              |
| |- osdev-01           |                                        |
| | |- nova-conductor   | enabled :-) 2018-03-15T10:09:10.000000 |
| | |- nova-scheduler   | enabled :-) 2018-03-15T10:09:10.000000 |
| | |- nova-consoleauth | enabled :-) 2018-03-15T10:09:01.000000 |
| az02                  | available                              |
| |- osdev-03           |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T10:09:01.000000 |
| |- osdev-ceph         |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T10:09:08.000000 |
| |- osdev-gpu          |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T10:09:05.000000 |
| az01                  | available                              |
| |- osdev-01           |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T10:09:10.000000 |
| |- osdev-02           |                                        |
| | |- nova-compute     | enabled :-) 2018-03-15T10:09:05.000000 |
+-----------------------+----------------------------------------+
```

#### Specifying an Availability Zone

- Create an instance in az02 (it is scheduled onto osdev-gpu):

```
$ openstack network list
+--------------------------------------+----------+--------------------------------------+
| ID                                   | Name     | Subnets                              |
+--------------------------------------+----------+--------------------------------------+
| 8ab35b74-d680-4cfc-8c61-810965e3992e | public1  | 2e6f24b8-3482-4e68-9d61-6306ff1da8a2 |
| 8d01509e-4a3a-497a-9118-3827c1e37672 | demo-net | 3b817b11-8fda-485f-bad9-0b7e30534d66 |
+--------------------------------------+----------+--------------------------------------+
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --availability-zone az02 demo1
$ openstack server list --long --column "Name" --column "Status" --column "Flavor Name" --column "Networks" --column "Availability Zone" --column "Host"
+-------+--------+--------------------+-------------------+-----------+
| Name  | Status | Networks           | Availability Zone | Host      |
+-------+--------+--------------------+-------------------+-----------+
| demo1 | ACTIVE | demo-net=10.0.0.11 | az02              | osdev-gpu |
+-------+--------+--------------------+-------------------+-----------+
```

- Create an instance in az01 (it is scheduled onto osdev-02):

```
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --availability-zone az01 demo2
$ openstack server list --long --column "Name" --column "Status" --column "Flavor Name" --column "Networks" --column "Availability Zone" --column "Host"
+-------+--------+--------------------+-------------------+-----------+
| Name  | Status | Networks           | Availability Zone | Host      |
+-------+--------+--------------------+-------------------+-----------+
| demo2 | ACTIVE | demo-net=10.0.0.3  | az01              | osdev-02  |
| demo1 | ACTIVE | demo-net=10.0.0.11 | az02              | osdev-gpu |
+-------+--------+--------------------+-------------------+-----------+
```

#### Aggregate Configuration

Set the `addr` metadata on each aggregate:

```
$ nova aggregate-set-metadata az01-ha01 addr=11
Metadata has been successfully updated for aggregate 3.
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts      | Metadata                            | UUID                                 |
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
| 3  | az01-ha01 | az01              | 'osdev-01' | 'addr=11', 'availability_zone=az01' | 3baac65d-2907-412a-98f5-60e582612548 |
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
$ nova aggregate-set-metadata az01-ha02 addr=12
Metadata has been successfully updated for aggregate 4.
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts      | Metadata                            | UUID                                 |
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
| 4  | az01-ha02 | az01              | 'osdev-02' | 'addr=12', 'availability_zone=az01' | 5ea0f221-024d-43f5-b1f1-6e0cc364ad39 |
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
$ nova aggregate-set-metadata az02-ha01 addr=21
Metadata has been successfully updated for aggregate 5.
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts      | Metadata                            | UUID                                 |
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
| 5  | az02-ha01 | az02              | 'osdev-03' | 'addr=21', 'availability_zone=az02' | 00cdcefa-bcd0-490c-bdee-9cc970268a03 |
+----+-----------+-------------------+------------+-------------------------------------+--------------------------------------+
$ nova aggregate-set-metadata az02-ha02 addr=22
Metadata has been successfully updated for aggregate 6.
+----+-----------+-------------------+---------------------------+-------------------------------------+--------------------------------------+
| Id | Name      | Availability Zone | Hosts                     | Metadata                            | UUID                                 |
+----+-----------+-------------------+---------------------------+-------------------------------------+--------------------------------------+
| 6  | az02-ha02 | az02              | 'osdev-ceph', 'osdev-gpu' | 'addr=22', 'availability_zone=az02' | 81de444f-4730-471a-bff5-ff11372c3096 |
+----+-----------+-------------------+---------------------------+-------------------------------------+--------------------------------------+
```

#### Flavor Configuration

```
$ openstack flavor create --vcpus 1 --ram 64 --disk 1 machine.az01-ha01
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| disk                       | 1                                    |
| id                         | 0c3bb453-146f-4093-b161-39c10978f0eb |
| name                       | machine.az01-ha01                    |
| os-flavor-access:is_public | True                                 |
| properties                 |                                      |
| ram                        | 64                                   |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
$ openstack flavor create --vcpus 1 --ram 64 --disk 1 machine.az01-ha02
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| disk                       | 1                                    |
| id                         | ba9a94aa-f841-4529-8d34-e3e9e8484f90 |
| name                       | machine.az01-ha02                    |
| os-flavor-access:is_public | True                                 |
| properties                 |                                      |
| ram                        | 64                                   |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
$ openstack flavor create --vcpus 1 --ram 64 --disk 1 machine.az02-ha01
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| disk                       | 1                                    |
| id                         | 326d7246-9d6a-4b73-8e89-83565887ada7 |
| name                       | machine.az02-ha01                    |
| os-flavor-access:is_public | True                                 |
| properties                 |                                      |
| ram                        | 64                                   |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
$ openstack flavor create --vcpus 1 --ram 64 --disk 1 machine.az02-ha02
+----------------------------+--------------------------------------+
| Field                      | Value                                |
+----------------------------+--------------------------------------+
| OS-FLV-DISABLED:disabled   | False                                |
| OS-FLV-EXT-DATA:ephemeral  | 0                                    |
| disk                       | 1                                    |
| id                         | f3fb1e5f-adcd-402c-9125-56dda997b52a |
| name                       | machine.az02-ha02                    |
| os-flavor-access:is_public | True                                 |
| properties                 |                                      |
| ram                        | 64                                   |
| rxtx_factor                | 1.0                                  |
| swap                       |                                      |
| vcpus                      | 1                                    |
+----------------------------+--------------------------------------+
```

```
$ nova flavor-key machine.az01-ha01 set addr=11
$ nova flavor-key machine.az01-ha02 set addr=12
$ nova flavor-key machine.az02-ha01 set addr=21
$ nova flavor-key machine.az02-ha02 set addr=22
$ openstack flavor list --long --column "Name" --column "Properties"
+-------------------+------------+
| Name              | Properties |
+-------------------+------------+
| machine.az01-ha01 | addr='11'  |
| m1.tiny           |            |
| m1.small          |            |
| m1.medium         |            |
| machine.az02-ha01 | addr='21'  |
| m1.large          |            |
| m1.xlarge         |            |
| machine.az01-ha02 | addr='12'  |
| machine.az02-ha02 | addr='22'  |
+-------------------+------------+
```

#### Targeting an Aggregate

Create VMs with the flavors that carry the `addr` metadata:

```
$ openstack server create --image cirros --flavor machine.az01-ha01 --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.az01-ha01
$ openstack server create --image cirros --flavor machine.az01-ha02 --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.az01-ha02
$ openstack server create --image cirros --flavor machine.az02-ha01 --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.az02-ha01
$ openstack server create --image cirros --flavor machine.az02-ha02 --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 server.az02-ha02
$ openstack server list --long --column "Name" --column "Status" --column "Networks" --column "Availability Zone" --column "Host"
+------------------+--------+--------------------+-------------------+-----------+
| Name             | Status | Networks           | Availability Zone | Host      |
+------------------+--------+--------------------+-------------------+-----------+
| server.az02-ha02 | ACTIVE | demo-net=10.0.0.15 | az02              | osdev-gpu |
| server.az02-ha01 | ACTIVE | demo-net=10.0.0.12 | az02              | osdev-03  |
| server.az01-ha02 | ACTIVE | demo-net=10.0.0.6  | az01              | osdev-02  |
| server.az01-ha01 | ACTIVE | demo-net=10.0.0.4  | az01              | osdev-01  |
+------------------+--------+--------------------+-------------------+-----------+
```
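This placement relies on AggregateInstanceExtraSpecsFilter matching each flavor's `addr` extra spec against the metadata of the aggregates a host belongs to. The function below is a simplified sketch of that comparison: it assumes plain string equality only (the real filter also understands operators like those listed for ComputeCapabilitiesFilter), it assumes the host's aggregate metadata has already been merged into a dict of sets, and `extra_specs_match` is a hypothetical name. Keys scoped with a prefix other than `aggregate_instance_extra_specs:` are skipped, so extra specs meant for other consumers do not interfere.

```python
SCOPE = 'aggregate_instance_extra_specs:'


def extra_specs_match(extra_specs, aggregate_metadata):
    """Simplified AggregateInstanceExtraSpecsFilter comparison.

    extra_specs: flavor extra specs, e.g. {'addr': '11'}.
    aggregate_metadata: merged host metadata, key -> set of values,
        e.g. {'addr': {'11'}, 'availability_zone': {'az01'}}.
    Only exact string equality is checked (no '>=', '<in>', ... operators).
    """
    for key, wanted in extra_specs.items():
        if ':' in key:
            if not key.startswith(SCOPE):
                continue  # scoped for another consumer: ignored here
            key = key[len(SCOPE):]
        if wanted not in aggregate_metadata.get(key, set()):
            return False
    return True


osdev_01 = {'addr': {'11'}, 'availability_zone': {'az01'}}
print(extra_specs_match({'addr': '11'}, osdev_01))  # True
print(extra_specs_match({'addr': '12'}, osdev_01))  # False
print(extra_specs_match({}, osdev_01))              # True: e.g. m1.tiny
```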

### Related Source Code

- Source of the availability zone filter (nova/scheduler/filters/availability_zone_filter.py):

```python
class AvailabilityZoneFilter(filters.BaseHostFilter):
    """Filters Hosts by availability zone.

    Works with aggregate metadata availability zones, using the key
    'availability_zone'
    Note: in theory a compute node can be part of multiple availability_zones
    """

    # Availability zones do not change within a request
    run_filter_once_per_request = True

    RUN_ON_REBUILD = False

    def host_passes(self, host_state, spec_obj):
        availability_zone = spec_obj.availability_zone

        if not availability_zone:
            return True

        metadata = utils.aggregate_metadata_get_by_host(
                host_state, key='availability_zone')

        if 'availability_zone' in metadata:
            hosts_passes = availability_zone in metadata['availability_zone']
            host_az = metadata['availability_zone']
        else:
            hosts_passes = availability_zone == CONF.default_availability_zone
            host_az = CONF.default_availability_zone

        if not hosts_passes:
            LOG.debug("Availability Zone '%(az)s' requested. "
                      "%(host_state)s has AZs: %(host_az)s",
                      {'host_state': host_state,
                       'az': availability_zone,
                       'host_az': host_az})

        return hosts_passes
```

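So the Availability Zone check first looks at the `availability_zone` metadata of the aggregates the host belongs to; only if no such metadata exists does it fall back to the globally configured `default_availability_zone`.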
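The decision can be restated in a few lines. In this sketch `az_filter_passes` is a hypothetical name, the host metadata is passed in as an already-merged dict of sets, and `'nova'` stands in for `CONF.default_availability_zone` (its default value). A host that belongs to no AZ-tagged aggregate therefore passes only when the request names the default zone.

```python
DEFAULT_AVAILABILITY_ZONE = 'nova'  # default of CONF.default_availability_zone


def az_filter_passes(requested_az, host_metadata,
                     default_az=DEFAULT_AVAILABILITY_ZONE):
    """Simplified AvailabilityZoneFilter.host_passes (no logging)."""
    if not requested_az:
        return True  # no zone requested: every host passes
    if 'availability_zone' in host_metadata:
        # Host is in at least one AZ-tagged aggregate: compare with those.
        return requested_az in host_metadata['availability_zone']
    # Host is in no AZ-tagged aggregate: it belongs to the default zone.
    return requested_az == default_az


osdev_gpu = {'availability_zone': {'az02'}, 'addr': {'22'}}
print(az_filter_passes('az02', osdev_gpu))  # True
print(az_filter_passes('az01', osdev_gpu))  # False
print(az_filter_passes('nova', {}))         # True: untagged host, default zone
```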

- Collecting a host's aggregate metadata (nova/scheduler/filters/utils.py):

```python
def aggregate_metadata_get_by_host(host_state, key=None):
    """Returns a dict of all metadata based on a metadata key for a specific
    host. If the key is not provided, returns a dict of all metadata.
    """
    aggrlist = host_state.aggregates
    metadata = collections.defaultdict(set)
    for aggr in aggrlist:
        if key is None or key in aggr.metadata:
            for k, v in aggr.metadata.items():
                metadata[k].update(x.strip() for x in v.split(','))
    return metadata
```
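One detail is easy to miss: when `key` is given, aggregates are *selected* by that key, but all of their metadata is merged, not only that key, and comma-separated values are split into individual entries. The sketch below reproduces the merging rule with plain dicts standing in for Aggregate objects (a simplification); `merge_aggregate_metadata` and the second aggregate's `'22, 23'` value are hypothetical.

```python
import collections


def merge_aggregate_metadata(aggregates, key=None):
    """Same merging rule as aggregate_metadata_get_by_host, but each
    aggregate is given as a plain metadata dict."""
    metadata = collections.defaultdict(set)
    for aggr_metadata in aggregates:
        # Select the aggregate if no key is given or it defines the key...
        if key is None or key in aggr_metadata:
            # ...then merge ALL of its entries, splitting CSV values.
            for k, v in aggr_metadata.items():
                metadata[k].update(x.strip() for x in v.split(','))
    return metadata


host_aggregates = [
    {'availability_zone': 'az02', 'addr': '22'},
    {'addr': '22, 23'},  # hypothetical second aggregate without an AZ
]
by_az = merge_aggregate_metadata(host_aggregates, key='availability_zone')
everything = merge_aggregate_metadata(host_aggregates)
print(dict(by_az))       # 'addr' comes along although only the AZ key was asked for
print(dict(everything))
```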

## Affinity

### Filter Configuration

- Add the SameHostFilter, DifferentHostFilter, ServerGroupAntiAffinityFilter and ServerGroupAffinityFilter filters:

```
$ vi /etc/kolla/nova-scheduler/nova.conf
[DEFAULT]
...
scheduler_default_filters = ServerGroupAntiAffinityFilter, ServerGroupAffinityFilter, SameHostFilter, DifferentHostFilter, AggregateInstanceExtraSpecsFilter, RetryFilter, AvailabilityZoneFilter, RamFilter, DiskFilter, ComputeFilter, ComputeCapabilitiesFilter, ImagePropertiesFilter
...
$ docker restart nova_scheduler
```

### Affinity Tests

#### Same Host

- Create an instance on the node that hosts instance server.az02-ha02:

```
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint same_host=6a928cc0-1509-4e00-91c8-6b43ceb05373 server.same
$ openstack server list --long --column "ID" --column "Name" --column "Status" --column "Networks" --column "Availability Zone" --column "Host"
+--------------------------------------+------------------+--------+--------------------+-------------------+-----------+
| ID                                   | Name             | Status | Networks           | Availability Zone | Host      |
+--------------------------------------+------------------+--------+--------------------+-------------------+-----------+
| aadc3d24-965f-437d-af40-70adee984cad | server.same      | ACTIVE | demo-net=10.0.0.10 | az02              | osdev-gpu |
| 6a928cc0-1509-4e00-91c8-6b43ceb05373 | server.az02-ha02 | ACTIVE | demo-net=10.0.0.15 | az02              | osdev-gpu |
| 3605afc0-7a8e-4ae9-a0cf-e0df64f6bfd6 | server.az02-ha01 | ACTIVE | demo-net=10.0.0.12 | az02              | osdev-03  |
| cdec034a-bdca-4651-88d5-c34d17ea12f1 | server.az01-ha02 | ACTIVE | demo-net=10.0.0.6  | az01              | osdev-02  |
| 276a4af1-762b-43c9-a064-7f1b27d46356 | server.az01-ha01 | ACTIVE | demo-net=10.0.0.4  | az01              | osdev-01  |
+--------------------------------------+------------------+--------+--------------------+-------------------+-----------+
```

#### Different Host

Create an instance on a node other than the one hosting server.az02-ha02:

```
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint different_host=6a928cc0-1509-4e00-91c8-6b43ceb05373 server.different
$ openstack server list --long --column "ID" --column "Name" --column "Status" --column "Networks" --column "Availability Zone" --column "Host"
+--------------------------------------+------------------+--------+--------------------+-------------------+-----------+
| ID                                   | Name             | Status | Networks           | Availability Zone | Host      |
+--------------------------------------+------------------+--------+--------------------+-------------------+-----------+
| d652614a-ce1b-4314-8c13-33c77527950d | server.different | ACTIVE | demo-net=10.0.0.8  | az02              | osdev-03  |
| aadc3d24-965f-437d-af40-70adee984cad | server.same      | ACTIVE | demo-net=10.0.0.10 | az02              | osdev-gpu |
| 6a928cc0-1509-4e00-91c8-6b43ceb05373 | server.az02-ha02 | ACTIVE | demo-net=10.0.0.15 | az02              | osdev-gpu |
| 3605afc0-7a8e-4ae9-a0cf-e0df64f6bfd6 | server.az02-ha01 | ACTIVE | demo-net=10.0.0.12 | az02              | osdev-03  |
| cdec034a-bdca-4651-88d5-c34d17ea12f1 | server.az01-ha02 | ACTIVE | demo-net=10.0.0.6  | az01              | osdev-02  |
| 276a4af1-762b-43c9-a064-7f1b27d46356 | server.az01-ha01 | ACTIVE | demo-net=10.0.0.4  | az01              | osdev-01  |
+--------------------------------------+------------------+--------+--------------------+-------------------+-----------+
```

#### Spread Scheduling (anti-affinity)

```
$ openstack server group create --policy anti-affinity group-anti-affinity
+----------+--------------------------------------+
| Field    | Value                                |
+----------+--------------------------------------+
| id       | 855ea22c-d369-4e90-a7b1-318064c72b16 |
| members  |                                      |
| name     | group-anti-affinity                  |
| policies | anti-affinity                        |
+----------+--------------------------------------+
```

```
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa1
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa2
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa3
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa4
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa5
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa6
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa7
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=855ea22c-d369-4e90-a7b1-318064c72b16 server.aa8
```

```
$ openstack server list --long --column "Name" --column "Status" --column "Networks" --column "Availability Zone" --column "Host"
+--------------------+--------+--------------------+-------------------+------------+
| Name               | Status | Networks           | Availability Zone | Host       |
+--------------------+--------+--------------------+-------------------+------------+
| server.aa8         | ERROR  |                    |                   | None       |
| server.aa7         | ERROR  |                    |                   | None       |
| server.aa6         | ERROR  |                    |                   | None       |
| server.aa5         | ACTIVE | demo-net=10.0.0.10 | az02              | osdev-ceph |
| server.aa4         | ACTIVE | demo-net=10.0.0.7  | az01              | osdev-01   |
| server.aa3         | ACTIVE | demo-net=10.0.0.18 | az01              | osdev-02   |
| server.aa2         | ACTIVE | demo-net=10.0.0.4  | az02              | osdev-03   |
| server.aa1         | ACTIVE | demo-net=10.0.0.11 | az02              | osdev-gpu  |
+--------------------+--------+--------------------+-------------------+------------+
```

Only 5 compute nodes exist, so the anti-affinity policy can place at most 5 members of the group; server.aa6 to server.aa8 find no valid host and end up in ERROR.
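The outcome can be reproduced with a greedy sketch of anti-affinity placement. It assumes a simplified filter (a host passes only if no group member runs on it yet) and ignores resource filters and weighing; picking the first remaining candidate is an arbitrary stand-in for the weighers, and `place_anti_affinity` is a hypothetical name.

```python
def place_anti_affinity(hosts, num_instances):
    """Greedy sketch of anti-affinity placement.

    Returns one entry per requested instance: the chosen host, or None when
    no host passes (Nova would raise NoValidHost and the instance goes to
    ERROR). Resource filters and weighing are ignored.
    """
    group_hosts = set()  # hosts that already run a member of the group
    placements = []
    for _ in range(num_instances):
        candidates = [h for h in hosts if h not in group_hosts]
        if not candidates:
            placements.append(None)
            continue
        chosen = candidates[0]  # stand-in for the weighers' choice
        group_hosts.add(chosen)
        placements.append(chosen)
    return placements


hosts = ['osdev-01', 'osdev-02', 'osdev-03', 'osdev-ceph', 'osdev-gpu']
print(place_anti_affinity(hosts, 8))
# ['osdev-01', 'osdev-02', 'osdev-03', 'osdev-ceph', 'osdev-gpu', None, None, None]
```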

#### Affinity scheduling

```
$ openstack server group create --policy affinity group-affinity
+----------+--------------------------------------+
| Field    | Value                                |
+----------+--------------------------------------+
| id       | d44f51d3-676e-4bca-ac56-76e998da9467 |
| members  |                                      |
| name     | group-affinity                       |
| policies | affinity                             |
+----------+--------------------------------------+
```

```
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=d44f51d3-676e-4bca-ac56-76e998da9467 server.a1
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=d44f51d3-676e-4bca-ac56-76e998da9467 server.a2
$ openstack server create --image cirros --flavor m1.tiny --key-name mykey --nic net-id=8d01509e-4a3a-497a-9118-3827c1e37672 --hint group=d44f51d3-676e-4bca-ac56-76e998da9467 server.a3
```

```
$ openstack server list --long --column "Name" --column "Status" --column "Networks" --column "Availability Zone" --column "Host"
+------------------+--------+--------------------+-------------------+------------+
| Name             | Status | Networks           | Availability Zone | Host       |
+------------------+--------+--------------------+-------------------+------------+
| server.a3        | ACTIVE | demo-net=10.0.0.24 | az02              | osdev-gpu  |
| server.a2        | ACTIVE | demo-net=10.0.0.20 | az02              | osdev-gpu  |
| server.a1        | ACTIVE | demo-net=10.0.0.19 | az02              | osdev-gpu  |
+------------------+--------+--------------------+-------------------+------------+
```

### Related source code

- Checking whether any of the given instances is on a host (nova/scheduler/filters/utils.py):

```python
import six  # needed for six.string_types below


def instance_uuids_overlap(host_state, uuids):
    """Tests for overlap between a host_state and a list of uuids.

    Returns True if any of the supplied uuids match any of the instance.uuid
    values in the host_state.
    """
    if isinstance(uuids, six.string_types):
        uuids = [uuids]
    set_uuids = set(uuids)
    # host_state.instances is a dict whose keys are the instance uuids
    host_uuids = set(host_state.instances.keys())
    return bool(host_uuids.intersection(set_uuids))
```

- Same-host filter (nova/scheduler/filters/affinity_filter.py):

```python
from oslo_log import log as logging

from nova.scheduler import filters
from nova.scheduler.filters import utils

LOG = logging.getLogger(__name__)


class SameHostFilter(filters.BaseHostFilter):
    """Schedule the instance on the same host as another instance in a set of
    instances.
    """

    # The hosts the instances are running on doesn't change within a request
    run_filter_once_per_request = True

    RUN_ON_REBUILD = False

    def host_passes(self, host_state, spec_obj):
        affinity_uuids = spec_obj.get_scheduler_hint('same_host')
        if affinity_uuids:
            overlap = utils.instance_uuids_overlap(host_state, affinity_uuids)
            return overlap
        # With no same_host key
        return True
```

- Different-host filter (nova/scheduler/filters/affinity_filter.py):

```python
class DifferentHostFilter(filters.BaseHostFilter):
    """Schedule the instance on a different host from a set of instances."""

    # The hosts the instances are running on doesn't change within a request
    run_filter_once_per_request = True

    RUN_ON_REBUILD = False

    def host_passes(self, host_state, spec_obj):
        affinity_uuids = spec_obj.get_scheduler_hint('different_host')
        if affinity_uuids:
            overlap = utils.instance_uuids_overlap(host_state, affinity_uuids)
            return not overlap
        # With no different_host key
        return True
```
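The two hint filters can be exercised without a Nova installation. The sketch below re-creates their logic with minimal stand-ins: HostState and RequestSpec are hypothetical classes that model only the fields the filters read (Nova's real objects are much richer), and the Python 3 str check replaces six.string_types. It shows that a request without the hint lets every host pass, while different_host drops the host already running the listed instance.

```python
"""Stand-alone re-creation of the same_host / different_host checks."""
from dataclasses import dataclass, field


@dataclass
class HostState:                       # hypothetical stand-in for Nova's HostState
    host: str
    instances: dict = field(default_factory=dict)   # instance uuid -> instance


@dataclass
class RequestSpec:                     # hypothetical stand-in for Nova's RequestSpec
    hints: dict = field(default_factory=dict)

    def get_scheduler_hint(self, name):
        return self.hints.get(name)


def uuids_overlap(host_state, uuids):
    if isinstance(uuids, str):         # a single uuid may be passed as a string
        uuids = [uuids]
    return bool(set(host_state.instances) & set(uuids))


def same_host_passes(host_state, spec):
    uuids = spec.get_scheduler_hint("same_host")
    return uuids_overlap(host_state, uuids) if uuids else True


def different_host_passes(host_state, spec):
    uuids = spec.get_scheduler_hint("different_host")
    return (not uuids_overlap(host_state, uuids)) if uuids else True


# "uuid-a" is a made-up instance id running on osdev-01.
hosts = [HostState("osdev-01", {"uuid-a": None}), HostState("osdev-02")]
spec = RequestSpec({"different_host": "uuid-a"})
print([h.host for h in hosts if different_host_passes(h, spec)])  # ['osdev-02']
```

On the command line these hints are supplied the same way as the group hint above, e.g. --hint different_host=<instance-uuid> or --hint same_host=<instance-uuid> on openstack server create.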

- Anti-affinity filter source (nova/scheduler/filters/affinity_filter.py):

```python
class _GroupAntiAffinityFilter(filters.BaseHostFilter):
    """Schedule the instance on a different host from a set of group
    hosts.
    """

    RUN_ON_REBUILD = False

    def host_passes(self, host_state, spec_obj):
        # Only invoke the filter if 'anti-affinity' is configured
        policies = (spec_obj.instance_group.policies
                    if spec_obj.instance_group else [])
        if self.policy_name not in policies:
            return True
        # NOTE(hanrong): Move operations like resize can check the same source
        # compute node where the instance is. That case, AntiAffinityFilter
        # must not return the source as a non-possible destination.
        if spec_obj.instance_uuid in host_state.instances.keys():
            return True

        group_hosts = (spec_obj.instance_group.hosts
                       if spec_obj.instance_group else [])
        LOG.debug("Group anti affinity: check if %(host)s not "
                  "in %(configured)s", {'host': host_state.host,
                                        'configured': group_hosts})
        if group_hosts:
            return host_state.host not in group_hosts

        # No groups configured
        return True


class ServerGroupAntiAffinityFilter(_GroupAntiAffinityFilter):
    def __init__(self):
        self.policy_name = 'anti-affinity'
        super(ServerGroupAntiAffinityFilter, self).__init__()
```

In other words, no two instances of the same anti-affinity group can be scheduled onto the same host.

- Affinity filter source (nova/scheduler/filters/affinity_filter.py):

```python
class _GroupAffinityFilter(filters.BaseHostFilter):
    """Schedule the instance on to host from a set of group hosts.
    """

    RUN_ON_REBUILD = False

    def host_passes(self, host_state, spec_obj):
        # Only invoke the filter if 'affinity' is configured
        policies = (spec_obj.instance_group.policies
                    if spec_obj.instance_group else [])
        if self.policy_name not in policies:
            return True

        group_hosts = (spec_obj.instance_group.hosts
                       if spec_obj.instance_group else [])
        LOG.debug("Group affinity: check if %(host)s in "
                  "%(configured)s", {'host': host_state.host,
                                     'configured': group_hosts})
        if group_hosts:
            return host_state.host in group_hosts

        # No groups configured
        return True


class ServerGroupAffinityFilter(_GroupAffinityFilter):
    def __init__(self):
        self.policy_name = 'affinity'
        super(ServerGroupAffinityFilter, self).__init__()
```

## NUMA binding

### Parameter configuration

- Add metadata to the flavor (its extra specs) using the following keys:

```
hw:numa_nodes=N                      - number of NUMA nodes in the guest
hw:numa_mempolicy=preferred|strict   - NUMA memory allocation policy in the guest
hw:numa_cpus.0=<cpu-list>            - guest vCPUs placed on guest NUMA node 0
hw:numa_cpus.1=<cpu-list>            - guest vCPUs placed on guest NUMA node 1
hw:numa_mem.0=<ram-size>             - memory (MB) on guest NUMA node 0
hw:numa_mem.1=<ram-size>             - memory (MB) on guest NUMA node 1
```

- Add metadata to the image (its image properties) using the following keys:

```
hw_numa_nodes=N                      - number of NUMA nodes to expose to the guest
hw_numa_mempolicy=preferred|strict   - memory allocation policy
hw_numa_cpus.0=<cpu-list>            - mapping of vCPUs N-M to NUMA node 0
hw_numa_cpus.1=<cpu-list>            - mapping of vCPUs N-M to NUMA node 1
hw_numa_mem.0=<ram-size>             - mapping N MB of RAM to NUMA node 0
hw_numa_mem.1=<ram-size>             - mapping N MB of RAM to NUMA node 1
```

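- numa_nodes: the number of NUMA nodes in the instance.
- numa_cpus.0: the IDs of the guest vCPUs on guest NUMA node 0, in the form "1-4,6". If the user specifies the placement manually, the vCPUs of every guest NUMA node must be given, and the total across all nodes must equal the flavor's vcpus.
- numa_mem.0: the memory size of guest NUMA node 0, in MB. If the user specifies the placement manually, the memory of every guest NUMA node must be given, and the total across all nodes must equal the flavor's memory_mb.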

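Note:

N is the index of a guest NUMA node and does not necessarily correspond to a host NUMA node. For example, on a platform with two NUMA nodes, the scheduler may place guest NUMA node 0, as referenced in hw:numa_mem.0, on host NUMA node 1, and vice versa. Similarly, the values in the CPU lists (FLAVOR-CORES) are guest vCPU numbers, not host CPU numbers. This feature therefore cannot be used to constrain an instance to specific host CPUs or host NUMA nodes.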

Warning:

If the value of hw:numa_cpus.N or hw:numa_mem.N exceeds the available CPUs or memory, an error is raised.

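Automatic placement (only numa_nodes is set):

1. numa_cpus and numa_mem must not be set;
2. CPUs and memory are distributed evenly across the nodes, starting from node 0.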

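Manual placement:

1. The total number of CPUs specified must equal the number of vCPUs in the flavor;
2. The total memory specified must equal the memory in the flavor;
3. Both numa_cpus and numa_mem must be set;
4. The resources of each NUMA node must be specified starting from node 0.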
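The placement rules above can be expressed as a small validator. This is a hypothetical helper, not Nova's implementation: it reads a plain dict of flavor extra specs, understands only ranges and commas in CPU lists, and assumes that automatic placement hands out contiguous vCPU ranges and rejects vcpus or memory_mb values that do not divide evenly by hw:numa_nodes.

```python
"""Hypothetical checker for guest NUMA extra specs (not Nova code)."""


def parse_cpu_list(spec):
    """Parse a CPU list such as "1-4,6" into a set of ints (ranges and commas only)."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        elif part:
            cpus.add(int(part))
    return cpus


def guest_numa_layout(vcpus, memory_mb, extra_specs):
    """Return [(vcpu_set, mem_mb), ...], one entry per guest NUMA node."""
    nodes = int(extra_specs["hw:numa_nodes"])
    manual = any(key.startswith(("hw:numa_cpus.", "hw:numa_mem."))
                 for key in extra_specs)

    if not manual:
        # Automatic placement: split evenly, starting from guest node 0.
        # Assumption: vcpus and memory_mb must divide evenly by the node count.
        if vcpus % nodes or memory_mb % nodes:
            raise ValueError("vcpus/memory_mb cannot be split evenly")
        cpu_step, mem_step = vcpus // nodes, memory_mb // nodes
        return [(set(range(i * cpu_step, (i + 1) * cpu_step)), mem_step)
                for i in range(nodes)]

    # Manual placement: every guest node 0..N-1 needs both keys.
    layout = []
    for i in range(nodes):
        try:
            cpus = parse_cpu_list(extra_specs["hw:numa_cpus.%d" % i])
            mem = int(extra_specs["hw:numa_mem.%d" % i])
        except KeyError:
            raise ValueError("guest node %d needs hw:numa_cpus.%d and "
                             "hw:numa_mem.%d" % (i, i, i)) from None
        layout.append((cpus, mem))

    # Each vCPU 0..vcpus-1 must appear exactly once across all nodes.
    assigned = [cpu for cpus, _ in layout for cpu in cpus]
    if sorted(assigned) != list(range(vcpus)):
        raise ValueError("CPU lists must cover vCPUs 0..vcpus-1 exactly once")
    if sum(mem for _, mem in layout) != memory_mb:
        raise ValueError("per-node memory must add up to memory_mb")
    return layout


print(guest_numa_layout(4, 4096, {"hw:numa_nodes": "2"}))
# [({0, 1}, 2048), ({2, 3}, 2048)]
```

For a manual layout, passing for example hw:numa_cpus.0="0-1", hw:numa_mem.0="2048", hw:numa_cpus.1="2-5", hw:numa_mem.1="4096" with a 6-vCPU, 6144 MB flavor passes, while the same keys with memory_mb=8192 raise ValueError because the per-node memory no longer adds up.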

### Filter configuration

Add the NUMATopologyFilter filter:

```
$ vi /etc/kolla/nova-scheduler/nova.conf
[DEFAULT]
...
scheduler_default_filters = NUMATopologyFilter, ServerGroupAntiAffinityFilter, ServerGroupAffinityFilter, SameHostFilter, DifferentHostFilter, AggregateInstanceExtraSpecsFilter, RetryFilter, AvailabilityZoneFilter, RamFilter, DiskFilter, ComputeFilter, ComputeCapabilitiesFilter, ImagePropertiesFilter
...
$ docker restart nova_scheduler
```
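A duplicate or a missing name in this long comma-separated list is easy to overlook. The sketch below is a hypothetical helper (not part of Nova or Kolla) that parses the option with Python's configparser and reports whether NUMATopologyFilter is enabled and which names appear more than once. It reads [DEFAULT] scheduler_default_filters as used in this article; on releases where the option lives under [filter_scheduler] enabled_filters, the section and option names would need to change.

```python
"""Hypothetical sanity check for the scheduler filter list in nova.conf."""
import configparser


def check_filters(conf_text, required="NUMATopologyFilter"):
    """Return (is `required` enabled?, list of duplicated filter names)."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(conf_text)
    raw = parser.get("DEFAULT", "scheduler_default_filters", fallback="")
    names = [name.strip() for name in raw.split(",") if name.strip()]
    seen, duplicates = set(), []
    for name in names:
        if name in seen and name not in duplicates:
            duplicates.append(name)
        seen.add(name)
    return required in names, duplicates


sample = """
[DEFAULT]
scheduler_default_filters = NUMATopologyFilter, ServerGroupAffinityFilter, ComputeFilter, ServerGroupAffinityFilter
"""
print(check_filters(sample))  # (True, ['ServerGroupAffinityFilter'])
```

To check the real file, pass the contents of /etc/kolla/nova-scheduler/nova.conf instead of the sample string.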

### Checking the CPU

Overall information:

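```
$ virsh nodeinfo
CPU model:           x86_64
CPU(s):              72
CPU frequency:       1963 MHz
CPU socket(s):       1
Core(s) per socket:  18
Thread(s) per core:  2
NUMA cell(s):        2
Memory size:         401248288 KiB
```

When there is more than one NUMA cell, virsh reports CPU socket(s) per cell, so the value 1 here is consistent with the two physical sockets counted below (1 socket × 18 cores × 2 threads × 2 cells = 72 CPUs).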

- Viewing with numactl (requires the numactl package):

```
$ numactl --hardware
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53
node 0 size: 195236 MB
node 0 free: 153414 MB
node 1 cpus: 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71
node 1 size: 196608 MB
node 1 free: 167539 MB
node distances:
node   0   1
  0:  10  21
  1:  21  10
$ numactl --show
policy: default
preferred node: current
physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71
cpubind: 0 1
nodebind: 0 1
membind: 0 1
```
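When correlating per-CPU statistics, such as the mpstat output further down, with NUMA nodes, a CPU-to-node lookup table is handy. A minimal sketch that parses the "node N cpus:" lines of numactl --hardware using only the standard library; the sample string is a shortened copy of the output above, and the function name is hypothetical.

```python
"""Sketch: map logical CPUs to NUMA nodes from `numactl --hardware` output."""
import re


def cpu_to_node(numactl_text):
    """Return {cpu: node} parsed from the 'node N cpus: ...' lines."""
    mapping = {}
    for match in re.finditer(r"^node (\d+) cpus:(.*)$", numactl_text, re.MULTILINE):
        node = int(match.group(1))
        for cpu in match.group(2).split():
            mapping[int(cpu)] = node
    return mapping


# Shortened copy of the output above (only a few CPUs per node).
sample = """available: 2 nodes (0-1)
node 0 cpus: 0 1 2 36 37 38
node 0 size: 195236 MB
node 1 cpus: 18 19 20 54 55 56
node 1 size: 196608 MB"""
m = cpu_to_node(sample)
print(m[36], m[54])  # 0 1
```

Feeding it the full output (e.g. via subprocess.run(["numactl", "--hardware"], capture_output=True, text=True).stdout) would give all 72 CPUs.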

- Viewing NUMA memory allocation statistics (the same counters can be read per node, e.g. cat /sys/devices/system/node/node0/numastat for node 0):

```
$ numastat
                           node0           node1
numa_hit             25692061493     30097928824
numa_miss                      0               0
numa_foreign                   0               0
interleave_hit            110618          109691
local_node           25689725277     30096685088
other_node               2336216         1243736
```

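- numa_hit: the number of allocations that were intended for this node and were satisfied from this node;
- numa_miss: the number of allocations that were intended for another node but were satisfied from this node (a high value suggests that the allocation policy should be adjusted);
- numa_foreign: the number of allocations that were intended for this node but were satisfied from another node (each numa_miss on one node has a matching numa_foreign on another);
- interleave_hit: the number of interleave-policy allocations that were intended for this node and were satisfied from it;
- local_node: the number of allocations on this node made by processes running on this node;
- other_node: the number of allocations on this node made by processes running on other nodes.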
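One simple way to put a number on the numa_miss guidance above is the share of a node's allocations that were misses, numa_miss / (numa_hit + numa_miss). This ratio is an illustrative metric chosen for this sketch, not something numastat itself reports; the parser assumes the default table layout shown above (a header row of node names followed by one counter per row).

```python
"""Sketch: per-node miss ratio computed from `numastat` output."""


def miss_ratios(numastat_text):
    """Return {node: numa_miss / (numa_hit + numa_miss)} for each node column."""
    lines = [line.split() for line in numastat_text.strip().splitlines()]
    nodes = lines[0]                                   # header: node0 node1 ...
    rows = {cols[0]: [int(v) for v in cols[1:]] for cols in lines[1:]}
    ratios = {}
    for i, node in enumerate(nodes):
        hit, miss = rows["numa_hit"][i], rows["numa_miss"][i]
        total = hit + miss
        ratios[node] = miss / total if total else 0.0
    return ratios


sample = """
                           node0           node1
numa_hit             25692061493     30097928824
numa_miss                      0               0
numa_foreign                   0               0
interleave_hit            110618          109691
local_node           25689725277     30096685088
other_node               2336216         1243736
"""
print(miss_ratios(sample))  # {'node0': 0.0, 'node1': 0.0}
```

On this host both ratios are zero, so there is no sign that allocations are landing on non-preferred nodes.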

- Viewing the load of each CPU (requires the sysstat package):

```
$ mpstat -P ALL
Linux 3.10.0-693.17.1.el7.x86_64 (osdev-01)  2018-03-19  _x86_64_  (72 CPU)

16:28:12  CPU    %usr   %nice    %sys %iowait    %irq   %soft  %steal  %guest  %gnice   %idle
16:28:12  all    2.38    8.79    0.70    0.00    0.00    0.01    0.00    0.00    0.00   88.11
16:28:12    0    8.83    3.29    1.50    0.00    0.00    0.30    0.00    0.00    0.00   86.09
16:28:12    1    7.54    2.88    1.29    0.00    0.00    0.05    0.00    0.00    0.00   88.24
16:28:12    2    7.60    2.89    1.30    0.00    0.00    0.03    0.00    0.00    0.00   88.18
16:28:12    3    6.97    2.93    1.20    0.00    0.00    0.02    0.00    0.00    0.00   88.87
16:28:12    4    3.91    5.84    0.87    0.00    0.00    0.02    0.00    0.00    0.00   89.36
16:28:12    5    3.95    5.64    0.88    0.00    0.00    0.01    0.00    0.00    0.00   89.52
16:28:12    6    3.14    7.24    0.80    0.00    0.00    0.01    0.00    0.00    0.00   88.80
16:28:12    7    2.38    8.41    0.74    0.01    0.00    0.01    0.00    0.00    0.00   88.46
16:28:12    8    2.34    9.37    0.76    0.01    0.00    0.01    0.00    0.00    0.00   87.53
16:28:12    9    2.19    9.73    0.75    0.01    0.00    0.01    0.00    0.00    0.00   87.32
16:28:12   10    2.12   10.09    0.75    0.01    0.00    0.01    0.00    0.00    0.00   87.03
16:28:12   11    2.10   10.44    0.74    0.01    0.00    0.01    0.00    0.00    0.00   86.71
16:28:12   12    2.04   10.72    0.75    0.01    0.00    0.01    0.00    0.00    0.00   86.48
16:28:12   13    2.00   11.14    0.76    0.01    0.00    0.01    0.00    0.00    0.00   86.10
16:28:12   14    2.00   11.52    0.76    0.01    0.00    0.01    0.00    0.00    0.00   85.70
16:28:12   15    1.97   11.80    0.76    0.01    0.00    0.01    0.00    0.00    0.00   85.45
16:28:12   16    1.97   12.03    0.76    0.01    0.00    0.01    0.00    0.00    0.00   85.22
16:28:12   17    1.96   12.20    0.76    0.01    0.00    0.01    0.00    0.00    0.00   85.06
16:28:12   18    2.17   17.85    0.97    0.01    0.00    0.00    0.00    0.00    0.00   79.00
16:28:12   19    2.55   17.04    0.99    0.01    0.00    0.00    0.00    0.00    0.00   79.41
16:28:12   20    2.72   17.36    1.07    0.01    0.00    0.00    0.00    0.00    0.00   78.84
16:28:12   21    2.61   17.27    1.03    0.01    0.00    0.00    0.00    0.00    0.00   79.07
16:28:12   22    2.37   17.39    1.00    0.01    0.00    0.00    0.00    0.00    0.00   79.23
16:28:12   23    2.16   17.50    0.99    0.01    0.00    0.00    0.00    0.00    0.00   79.35
16:28:12   24    2.05   17.52    0.98    0.01    0.00    0.00    0.00    0.00    0.00   79.44
16:28:12   25    1.98   17.52    0.97    0.01    0.00    0.00    0.00    0.00    0.00   79.53
16:28:12   26    1.93   17.47    0.97    0.01    0.00    0.00    0.00    0.00    0.00   79.62
16:28:12   27    1.89   17.50    0.97    0.01    0.00    0.00    0.00    0.00    0.00   79.63
16:28:12   28    1.85   17.51    0.96    0.01    0.00    0.00    0.00    0.00    0.00   79.66
16:28:12   29    1.83   17.50    0.96    0.01    0.00    0.00    0.00    0.00    0.00   79.70
16:28:12   30    1.82   17.46    0.96    0.01    0.00    0.00    0.00    0.00    0.00   79.76
16:28:12   31    1.78   17.42    0.95    0.01    0.00    0.00    0.00    0.00    0.00   79.83
16:28:12   32    1.79   17.36    0.94    0.01    0.00    0.00    0.00    0.00    0.00   79.90
16:28:12   33    1.77   17.34    0.94    0.01    0.00    0.00    0.00    0.00    0.00   79.94
16:28:12   34    1.75   17.33    0.94    0.01    0.00    0.00    0.00    0.00    0.00   79.97
16:28:12   35    1.73   17.30    0.94    0.01    0.00    0.00    0.00    0.00    0.00   80.02
16:28:12   36    4.90    3.96    0.61    0.00    0.00    0.00    0.00    0.00    0.00   90.53
16:28:12   37    9.35    6.24    1.46    0.00    0.00    0.00    0.00    0.00    0.00   82.95
16:28:12   38    5.90    4.43    0.87    0.00    0.00    0.00    0.00    0.00    0.00   88.79
16:28:12   39    6.10    3.83    0.81    0.00    0.00    0.00    0.00    0.00    0.00   89.25
16:28:12   40    2.95    3.55    0.53    0.00    0.00    0.00    0.00    0.00    0.00   92.95
16:28:12   41    2.28    3.48    0.44    0.00    0.00    0.00    0.00    0.00    0.00   93.79
16:28:12   42    1.44    3.34    0.35    0.00    0.00    0.00    0.00    0.00    0.00   94.87
16:28:12   43    0.94    3.24    0.30    0.00    0.00    0.00    0.00    0.00    0.00   95.52
16:28:12   44    0.89    3.41    0.29    0.00    0.00    0.00    0.00    0.00    0.00   95.41
16:28:12   45    0.86    3.43    0.28    0.00    0.00    0.00    0.00    0.00    0.00   95.42
16:28:12   46    0.83    3.44    0.28    0.00    0.00    0.00    0.00    0.00    0.00   95.45
16:28:12   47    0.84    3.43    0.27    0.00    0.00    0.00    0.00    0.00    0.00   95.46
16:28:12   48    0.83    3.50    0.27    0.00    0.00    0.00    0.00    0.00    0.00   95.40
16:28:12   49    0.88    3.31    0.27    0.00    0.00    0.00    0.00    0.00    0.00   95.53
16:28:12   50    0.91    3.16    0.27    0.00    0.00    0.00    0.00    0.00    0.00   95.65
16:28:12   51    0.90    3.15    0.27    0.00    0.00    0.00    0.00    0.00    0.00   95.68
16:28:12   52    0.89    3.19    0.27    0.00    0.00    0.00    0.00    0.00    0.00   95.65
16:28:12   53    0.91    3.40    0.28    0.00    0.00    0.00    0.00    0.00    0.00   95.40
16:28:12   54    1.00    5.91    0.37    0.00    0.00    0.00    0.00    0.00    0.00   92.71
16:28:12   55    5.70    7.12    1.16    0.00    0.00    0.00    0.00    0.00    0.00   86.01
16:28:12   56    2.49    6.33    0.69    0.00    0.00    0.00    0.00    0.00    0.00   90.49
16:28:12   57    2.93    6.12    0.66    0.00    0.00    0.00    0.00    0.00    0.00   90.29
16:28:12   58    2.15    6.06    0.60    0.00    0.00    0.00    0.00    0.00    0.00   91.19
16:28:12   59    1.52    6.15    0.56    0.00    0.00    0.00    0.00    0.00    0.00   91.77
16:28:12   60    1.23    6.25    0.48    0.00    0.00    0.00    0.00    0.00    0.00   92.03
16:28:12   61    1.11    5.68    0.42    0.00    0.00    0.00    0.00    0.00    0.00   92.79
16:28:12   62    1.03    5.67    0.41    0.00    0.00    0.00    0.00    0.00    0.00   92.89
16:28:12   63    1.01    5.72    0.39    0.00    0.00    0.00    0.00    0.00    0.00   92.88
16:28:12   64    0.96    5.62    0.38    0.00    0.00    0.00    0.00    0.00    0.00   93.02
16:28:12   65    0.94    5.50    0.37    0.00    0.00    0.00    0.00    0.00    0.00   93.18
16:28:12   66    0.93    5.50    0.37    0.00    0.00    0.00    0.00    0.00    0.00   93.20
16:28:12   67    0.92    5.53    0.37    0.00    0.00    0.00    0.00    0.00    0.00   93.17
16:28:12   68    0.91    5.52    0.36    0.00    0.00    0.00    0.00    0.00    0.00   93.21
16:28:12   69    0.91    5.54    0.36    0.00    0.00    0.00    0.00    0.00    0.00   93.18
16:28:12   70    0.89    5.61    0.36    0.00    0.00    0.00    0.00    0.00    0.00   93.13
16:28:12   71    0.89    5.94    0.37    0.00    0.00    0.00    0.00    0.00    0.00   92.79
```

#### Individual checks

- Counting the sockets:

```
$ grep 'physical id' /proc/cpuinfo | awk -F: '{print $2 | "sort -un"}' | wc -l
2
```

There are 2 sockets in total.

- Counting the processors (logical CPUs) in each socket:

```
$ grep 'physical id' /proc/cpuinfo | awk -F: '{print $2}' | sort | uniq -c
     36  0
     36  1
```

Each socket has 36 processors (logical CPUs).

- Counting the cores in each socket:

```
$ cat /proc/cpuinfo | grep 'core' | sort -u
core id : 0
core id : 1
core id : 10
core id : 11
core id : 16
core id : 17
core id : 18
core id : 19
core id : 2
core id : 20
core id : 24
core id : 25
core id : 26
core id : 27
core id : 3
core id : 4
core id : 8
core id : 9
cpu cores : 18
```

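Each socket contains 18 cores, and each core has 2 processors (hardware threads). The core IDs are not contiguous, but there are 18 distinct values.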
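The three checks above can be combined by parsing /proc/cpuinfo once and grouping processors by physical id. A sketch assuming the x86 cpuinfo layout (blank-line-separated blocks that contain physical id and core id fields, which other architectures may not provide); the function name is hypothetical. On the host above it would be expected to report 36 logical CPUs and 18 cores for each of the two sockets, i.e. 2 threads per core.

```python
"""Sketch: derive sockets, logical CPUs and cores from /proc/cpuinfo text."""
from collections import defaultdict


def cpu_topology(cpuinfo_text):
    """Return {socket_id: (logical_cpus, cores)} grouped by 'physical id'."""
    cpus_per_socket = defaultdict(int)
    cores_per_socket = defaultdict(set)
    for block in cpuinfo_text.strip().split("\n\n"):   # one block per processor
        fields = {}
        for line in block.splitlines():
            key, _, value = line.partition(":")
            fields[key.strip()] = value.strip()
        socket = fields["physical id"]
        cpus_per_socket[socket] += 1
        cores_per_socket[socket].add(fields["core id"])
    return {s: (cpus_per_socket[s], len(cores_per_socket[s]))
            for s in cpus_per_socket}


# Synthetic sample: 2 sockets x 2 cores x 2 threads = 8 processors.
sample = "\n\n".join(
    "processor\t: %d\nphysical id\t: %d\ncore id\t\t: %d" % (n, n // 4, n % 2)
    for n in range(8)
)
print(cpu_topology(sample))  # {'0': (4, 2), '1': (4, 2)}
```

On a real host, call cpu_topology(open("/proc/cpuinfo").read()); threads per core is then logical_cpus / cores for each socket.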

#### Topology analysis